WebRTC源码之音频设备的录制流程源码分析

WebRTC专题开嗨鸭 !!!一、 WebRTC 线程模型

2、WebRTC网络PhysicalSocketServer之WSAEventselect模型使用

二、 WebRTC媒体协商

2、WebRTC网络PhysicalSocketServer之WSAEventselect模型使用

3、WebRTC之证书(certificate)生成的时机分析

三、 WebRTC 音频数据采集

四、 WebRTC 音频引擎(编解码和3A算法)

五、 WebRTC 视频数据采集

六、 WebRTC 视频引擎( 编解码)

七、 WebRTC 网络传输

2、WebRTC的ICE之Dtls/SSL/TLSv1.x协议详解

八、 WebRTC服务质量(Qos)

3、WebRTC之NACK、RTX 在什么时机判断丢包发送NACK请求和RTX丢包重传

九、 NetEQ

十、 Simulcast与SVC

前言

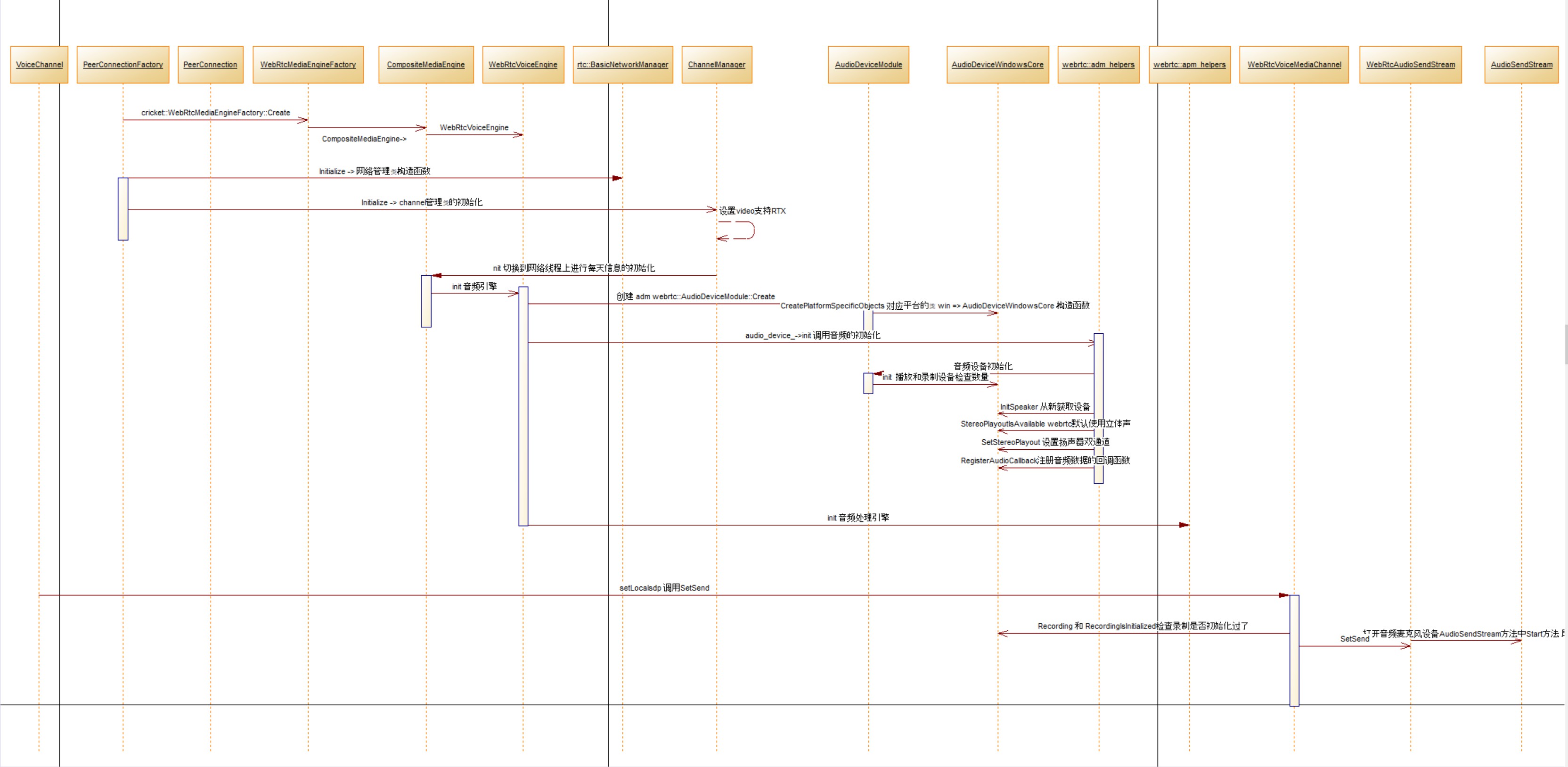

WebRTC音频引擎启动基本流程图

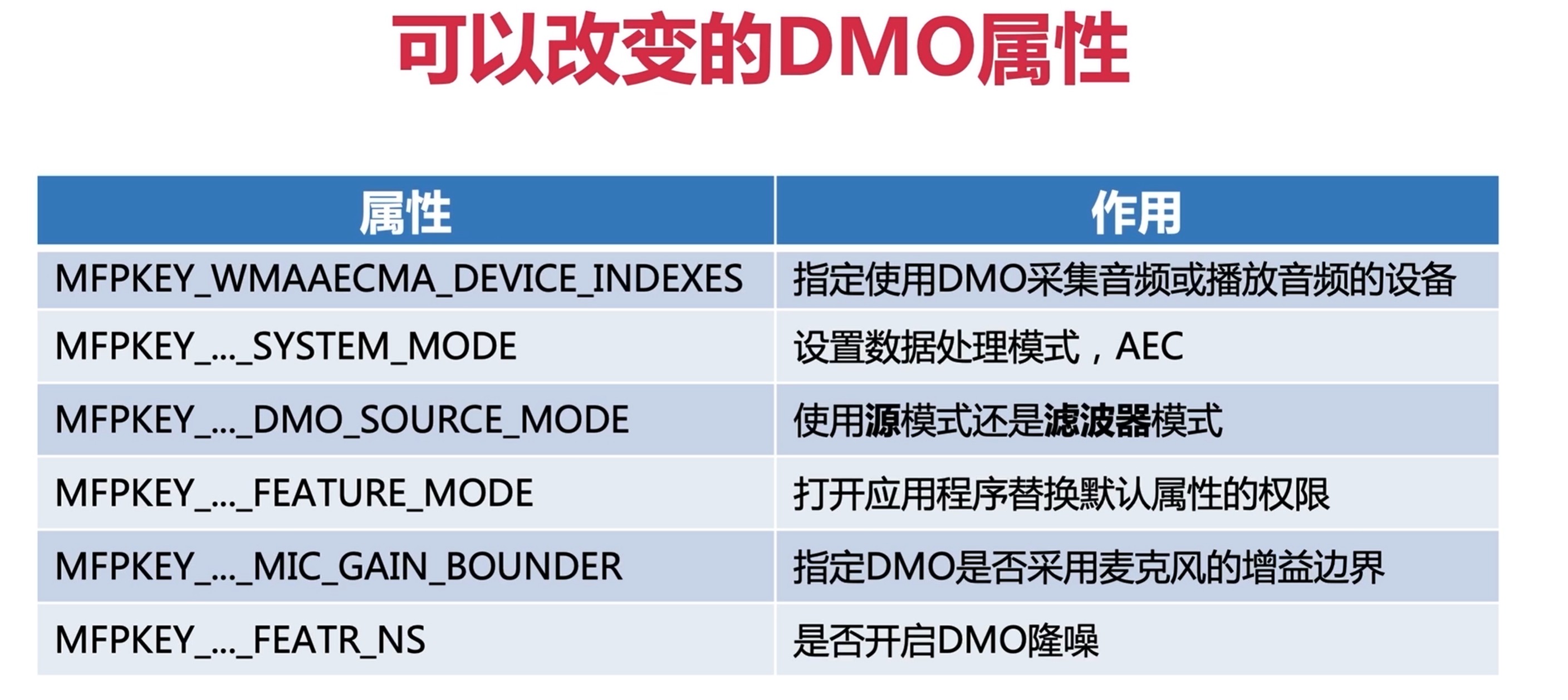

一、音频DMO组件

- AEC 音频采集 硬件回音消除

- 麦克风阵列处理

- 噪音抑制

- 自动增益控制

- 语言活动检查

二、使用音频DMO的基本步骤 、分为三大步骤

### 1、初始化DMO 和接口音频DMO支持两个接口

- 音频DMO只支持IMediaObject和IPropertyStore接口

- IMediaObject 的CocreateInstance创建、这也是初始化

- IPropertyStore由DMO的QueryInterface创建

区分IPropertyStore接口

IMMDevice也有该接口、使用OpenPropertyStore获得

DEMO使用 QueryInterface获取该接口

① 、DMO的两种操作模式

- 源模式、所有的操作都交于DMO管理、从DMO取数据即可

- Filter模式、需要应用层主动发音频数据到DMO

DMO的初始化代码中InitRecordingDMO方法中

// Capture initialization when the built-in AEC DirectX Media Object (DMO) is

// used. Called from InitRecording(), most of which is skipped over. The DMO

// handles device initialization itself.

// Reference: http://msdn.microsoft.com/en-us/library/ff819492(v=vs.85).aspx

int32_t AudioDeviceWindowsCore::InitRecordingDMO() {

assert(_builtInAecEnabled);

assert(_dmo != NULL);

if (SetDMOProperties() == -1) {

return -1;

}

/* TODO@chensong 20220806 音频媒体数据结构的介绍

typedef struct _DMOMediaType

{

GUID majortype; // 流的主类型 GUID

GUID subtype; // 流的子类型 GUID

BOOL bFixedSizeSamples; // 采样是否是固定大小、音频为TRUE

BOOL bTemporalCompression; // 时域压缩 [音视频 分为时域和频域 视频==》【时域:时间顺序继续压缩 帧间压缩】【频域: 帧内压缩】]

ULONG lSampleSize; // 以字节为单位的采样大小

GUID formattype; // 数据格式类型, 音频为WAVEFORMATEX

IUnknown *pUnk; // 未使用

ULONG cbFormat; // 不同媒体类型格式块的大小

[size_is] BYTE *pbFormat; // 根据cbFormat决定该字段

} DMO_MEDIA_TYPE;

*/

DMO_MEDIA_TYPE mt = {};

/*

函数功能 : 初始化DMO_MEDIA_TYPE变量

参数1 : 需要初始化的DMO_MEDIA_TYPE对象指针

参数2 : 分配的格式块占有字节数

*/

HRESULT hr = MoInitMediaType(&mt, sizeof(WAVEFORMATEX));

if (FAILED(hr)) {

MoFreeMediaType(&mt);

_TraceCOMError(hr);

return -1;

}

mt.majortype = MEDIATYPE_Audio;

mt.subtype = MEDIASUBTYPE_PCM;

mt.formattype = FORMAT_WaveFormatEx;

// Supported formats

// nChannels: 1 (in AEC-only mode)

// nSamplesPerSec: 8000, 11025, 16000, 22050

// wBitsPerSample: 16

WAVEFORMATEX* ptrWav = reinterpret_cast<WAVEFORMATEX*>(mt.pbFormat);

ptrWav->wFormatTag = WAVE_FORMAT_PCM;

ptrWav->nChannels = 1;

// 16000 is the highest we can support with our resampler.

ptrWav->nSamplesPerSec = 16000;

ptrWav->nAvgBytesPerSec = 32000;

ptrWav->nBlockAlign = 2;

ptrWav->wBitsPerSample = 16;

ptrWav->cbSize = 0;

// Set the VoE format equal to the AEC output format.

_recAudioFrameSize = ptrWav->nBlockAlign;

_recSampleRate = ptrWav->nSamplesPerSec;

_recBlockSize = ptrWav->nSamplesPerSec / 100;

_recChannels = ptrWav->nChannels;

// Set the DMO output format parameters.

// TODO@chensong 20220806 音频DMO设备接口参数说明

// param 1 : 输出源索引值, 从0开始

// param 2 : DMO_MEDIA_TYPE 类型指针

// param 3 : 按位的组合标记,设置为0

hr = _dmo->SetOutputType(kAecCaptureStreamIndex, &mt, 0);

MoFreeMediaType(&mt);

if (FAILED(hr)) {

_TraceCOMError(hr);

return -1;

}

if (_ptrAudioBuffer) {

_ptrAudioBuffer->SetRecordingSampleRate(_recSampleRate);

_ptrAudioBuffer->SetRecordingChannels(_recChannels);

} else {

// Refer to InitRecording() for comments.

RTC_LOG(LS_VERBOSE)

<< "AudioDeviceBuffer must be attached before streaming can start";

}

_mediaBuffer = new MediaBufferImpl(_recBlockSize * _recAudioFrameSize);

// Optional, but if called, must be after media types are set.

hr = _dmo->AllocateStreamingResources();

if (FAILED(hr)) {

_TraceCOMError(hr);

return -1;

}

_recIsInitialized = true;

RTC_LOG(LS_VERBOSE) << "Capture side is now initialized";

return 0;

}

也是在InitRecordingDMO方法中实现的

①、如果使用filter模式,我们需要设置数据的输入格式

②、如果使用source模式则不需要设置输入数据的格式

③、两者都需要设置输出数据格式

④、 SetOutputType方法

是在SetOutputType方法中实现的

// param 1 : 输出源索引值, 从0开始

// param 2 : DMO_MEDIA_TYPE 类型指针

// param 3 : 按位的组合标记,设置为0

virtual HRESULT STDMETHODCALLTYPE SetOutputType(

DWORD dwOutputStreamIndex,

/* [annotation][in] */

_In_opt_ const DMO_MEDIA_TYPE *pmt,

DWORD dwFlags) = 0;

// 结构体的介绍

/* TODO@chensong 20220806 音频媒体数据结构的介绍

typedef struct _DMOMediaType

{

GUID majortype; // 流的主类型 GUID

GUID subtype; // 流的子类型 GUID

BOOL bFixedSizeSamples; // 采样是否是固定大小、音频为TRUE

BOOL bTemporalCompression; // 时域压缩

ULONG lSampleSize; // 以字节为单位的采样大小

GUID formattype; // 数据格式类型, 音频为WAVEFORMATEX

IUnknown *pUnk; // 未使用

ULONG cbFormat; // 不同媒体类型格式块的大小

[size_is] BYTE *pbFormat; // 根据cbFormat决定该字段

} DMO_MEDIA_TYPE;

*/

⑤、DMO_MEDIA_TYPE说明

子类型: MEDIASUBTYPE_PCM、 MEDIASUBTYPE_FLOAT

数据块格式: WAVEFORMAT、WAVEFORMATEX

采样率: 8000/11025/16000/22050

Channel: 1 AEC、 2/4麦克风阵列

采样位数: 16

⑥、MoInitMediaType方法 初始化MDMO_MEDIA_TYPE变量

int AudioDeviceWindowsCore::SetDMOProperties() {

HRESULT hr = S_OK;

assert(_dmo != NULL);

rtc::scoped_refptr<IPropertyStore> ps;

{

IPropertyStore* ptrPS = NULL;

hr = _dmo->QueryInterface(IID_IPropertyStore,

reinterpret_cast<void**>(&ptrPS));

if (FAILED(hr) || ptrPS == NULL) {

_TraceCOMError(hr);

return -1;

}

ps = ptrPS;

SAFE_RELEASE(ptrPS);

}

// Set the AEC system mode.

// SINGLE_CHANNEL_AEC - AEC processing only.

// 修改DMO得属性 使用AEC模式

if (SetVtI4Property(ps, MFPKEY_WMAAECMA_SYSTEM_MODE, SINGLE_CHANNEL_AEC)) {

return -1;

}

// Set the AEC source mode.

// VARIANT_TRUE - Source mode (we poll the AEC for captured data).

// 工作模式得设置 使用filter模式

if (SetBoolProperty(ps, MFPKEY_WMAAECMA_DMO_SOURCE_MODE, VARIANT_TRUE) ==

-1) {

return -1;

}

// Enable the feature mode.

// This lets us override all the default processing settings below.

// 我们应用是否允许修改属性 可以修改得

if (SetBoolProperty(ps, MFPKEY_WMAAECMA_FEATURE_MODE, VARIANT_TRUE) == -1) {

return -1;

}

// Disable analog AGC (default enabled).

// 是否使用增益边界 WebRTC中使用增益

if (SetBoolProperty(ps, MFPKEY_WMAAECMA_MIC_GAIN_BOUNDER, VARIANT_FALSE) ==

-1) {

return -1;

}

// Disable noise suppression (default enabled).

// 0 - Disabled, 1 - Enabled

// 是否使用降燥 WebRTC中是使用自己得降燥模块得

if (SetVtI4Property(ps, MFPKEY_WMAAECMA_FEATR_NS, 0) == -1) {

return -1;

}

// Relevant parameters to leave at default settings:

// MFPKEY_WMAAECMA_FEATR_AGC - Digital AGC (disabled).

// MFPKEY_WMAAECMA_FEATR_CENTER_CLIP - AEC center clipping (enabled).

// MFPKEY_WMAAECMA_FEATR_ECHO_LENGTH - Filter length (256 ms).

// TODO(andrew): investigate decresing the length to 128 ms.

// MFPKEY_WMAAECMA_FEATR_FRAME_SIZE - Frame size (0).

// 0 is automatic; defaults to 160 samples (or 10 ms frames at the

// selected 16 kHz) as long as mic array processing is disabled.

// MFPKEY_WMAAECMA_FEATR_NOISE_FILL - Comfort noise (enabled).

// MFPKEY_WMAAECMA_FEATR_VAD - VAD (disabled).

// Set the devices selected by VoE. If using a default device, we need to

// search for the device index.

// 设置输入设备和输出设备的id

int inDevIndex = _inputDeviceIndex;

int outDevIndex = _outputDeviceIndex;

if (!_usingInputDeviceIndex) {

ERole role = eCommunications;

if (_inputDevice == AudioDeviceModule::kDefaultDevice) {

role = eConsole;

}

// 获取设备id

if (_GetDefaultDeviceIndex(eCapture, role, &inDevIndex) == -1) {

return -1;

}

}

if (!_usingOutputDeviceIndex) {

ERole role = eCommunications;

if (_outputDevice == AudioDeviceModule::kDefaultDevice) {

role = eConsole;

}

// 获取设备id

if (_GetDefaultDeviceIndex(eRender, role, &outDevIndex) == -1) {

return -1;

}

}

// 把输入设备和输出设备放到devIndex中

DWORD devIndex = static_cast<uint32_t>(outDevIndex << 16) +

static_cast<uint32_t>(0x0000ffff & inDevIndex);

RTC_LOG(LS_VERBOSE) << "Capture device index: " << inDevIndex

<< ", render device index: " << outDevIndex;

// 设置设备的值到DMO中去, DMO使用那个输入设备或者那个输出设备id

if (SetVtI4Property(ps, MFPKEY_WMAAECMA_DEVICE_INDEXES, devIndex) == -1) {

return -1;

}

return 0;

}

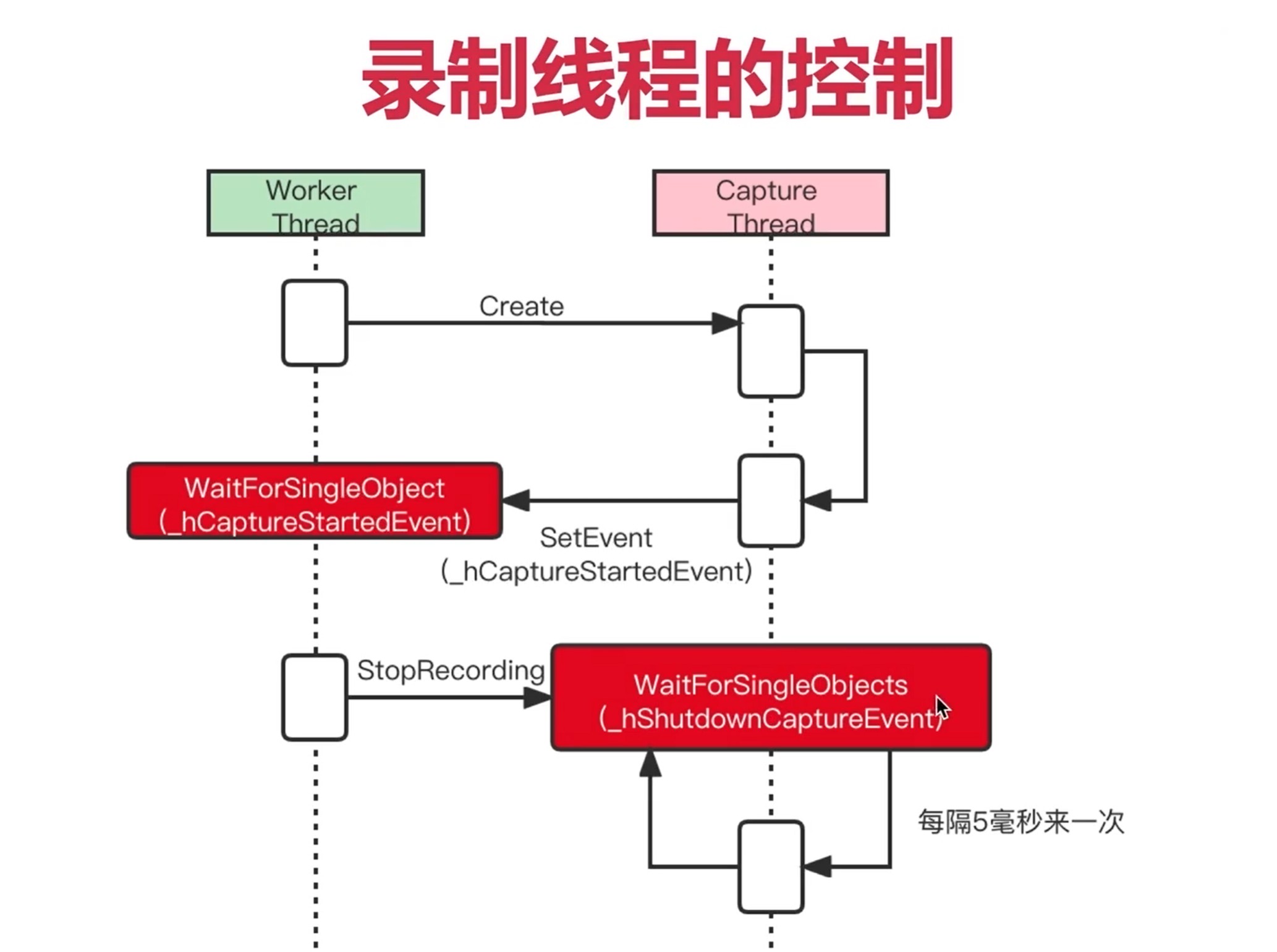

①、在处理数据前, 需要调用ALLocate Streaming Resource方法

②、AllocateStreamingResource 分配的资源由DMO内部使用

③、如果使用filter模式,需要调用ProcessInput向DMO传数据

获取输出数据

- 创建Buffer来获得输出数据、该Buffer实现了IMediaBuffer接口

- 清空DMO_OUTPUT_DATA_BUFFER中数据,防止有脏数据

- DMO_…_BUFFER中pBUffer成员指向你自己的Buffer

- 将DMO_…_BUFFER转给ProcessOut方法

- 通过上面步骤持续从DMO中获取数据

在[moudles/audio_device/win/audio_decvice_core.cc]DoCaptureThreadPollDMO中实现

DWORD AudioDeviceWindowsCore::DoCaptureThreadPollDMO() {

assert(_mediaBuffer != NULL);

bool keepRecording = true;

// Initialize COM as MTA in this thread.

ScopedCOMInitializer comInit(ScopedCOMInitializer::kMTA);

if (!comInit.succeeded()) {

RTC_LOG(LS_ERROR) << "failed to initialize COM in polling DMO thread";

return 1;

}

HRESULT hr = InitCaptureThreadPriority();

if (FAILED(hr)) {

return hr;

}

// Set event which will ensure that the calling thread modifies the

// recording state to true.

SetEvent(_hCaptureStartedEvent);

// >> ---------------------------- THREAD LOOP ----------------------------

while (keepRecording) {

// Poll the DMO every 5 ms.

// (The same interval used in the Wave implementation.)

DWORD waitResult = WaitForSingleObject(_hShutdownCaptureEvent, 5);

switch (waitResult) {

case WAIT_OBJECT_0: // _hShutdownCaptureEvent

keepRecording = false;

break;

case WAIT_TIMEOUT: // timeout notification

break;

default: // unexpected error

RTC_LOG(LS_WARNING) << "Unknown wait termination on capture side";

hr = -1; // To signal an error callback.

keepRecording = false;

break;

}

while (keepRecording) {

rtc::CritScope critScoped(&_critSect);

DWORD dwStatus = 0;

{

/*

typedef struct _DMO_OUTPUT_DATA_BUFFER

{

IMediaBuffer *pBuffer; // 指向由应用分配的支持IMediaBuffer接口的BUffer

DWORD dwStatus; // 处理输出后, DMO修改该标记

REFERENCE_TIME rtTimestamp; // 指明数据在该BUffer中的开始时间戳

REFERENCE_TIME rtTimelength; // 指定BUffer中数据长度的参考时间

} DMO_OUTPUT_DATA_BUFFER;

*/

DMO_OUTPUT_DATA_BUFFER dmoBuffer = {0};

dmoBuffer.pBuffer = _mediaBuffer;

dmoBuffer.pBuffer->AddRef();

// Poll the DMO for AEC processed capture data. The DMO will

// copy available data to |dmoBuffer|, and should only return

// 10 ms frames. The value of |dwStatus| should be ignored.

hr = _dmo->ProcessOutput(0, 1, &dmoBuffer, &dwStatus);

SAFE_RELEASE(dmoBuffer.pBuffer);

dwStatus = dmoBuffer.dwStatus;

}

if (FAILED(hr)) {

_TraceCOMError(hr);

keepRecording = false;

assert(false);

break;

}

ULONG bytesProduced = 0;

BYTE* data;

// Get a pointer to the data buffer. This should be valid until

// the next call to ProcessOutput.

hr = _mediaBuffer->GetBufferAndLength(&data, &bytesProduced);

if (FAILED(hr)) {

_TraceCOMError(hr);

keepRecording = false;

assert(false);

break;

}

if (bytesProduced > 0) {

const int kSamplesProduced = bytesProduced / _recAudioFrameSize;

// TODO(andrew): verify that this is always satisfied. It might

// be that ProcessOutput will try to return more than 10 ms if

// we fail to call it frequently enough.

assert(kSamplesProduced == static_cast<int>(_recBlockSize));

assert(sizeof(BYTE) == sizeof(int8_t));

_ptrAudioBuffer->SetRecordedBuffer(reinterpret_cast<int8_t*>(data),

kSamplesProduced);

_ptrAudioBuffer->SetVQEData(0, 0);

_UnLock(); // Release lock while making the callback.

_ptrAudioBuffer->DeliverRecordedData();

_Lock();

}

// Reset length to indicate buffer availability.

hr = _mediaBuffer->SetLength(0);

if (FAILED(hr)) {

_TraceCOMError(hr);

keepRecording = false;

assert(false);

break;

}

if (!(dwStatus & DMO_OUTPUT_DATA_BUFFERF_INCOMPLETE)) {

// The DMO cannot currently produce more data. This is the

// normal case; otherwise it means the DMO had more than 10 ms

// of data available and ProcessOutput should be called again.

break;

}

}

}

// ---------------------------- THREAD LOOP ---------------------------- <<

RevertCaptureThreadPriority();

if (FAILED(hr)) {

RTC_LOG(LS_ERROR)

<< "Recording error: capturing thread has ended prematurely";

} else {

RTC_LOG(LS_VERBOSE) << "Capturing thread is now terminated properly";

}

return hr;

}

总结:

WebRTC源码分析地址:https://github.com/chensongpoixs/cwebrtc