=====================================================

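Redis source code study series:

Redis source analysis: in-memory encodings (intset and ziplist)

Redis source analysis: the object system (string, list, hash, set, and zset sorted set)

Redis source analysis: saving data from a background process (RDB and AOF files)

Redis source analysis: cluster, part 1: how the CRC16 slot-assignment algorithm works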

=====================================================

I keep updating my Chinese-language analysis of the Redis source code on GitHub at https://github.com/chensongpoixs/credis_source. Let's learn and improve together.

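Preface

Redis's high-availability model (Sentinel) uses the Raft consensus algorithm for leader election, in a fairly simple form.

Main text

1. Overall flowchart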

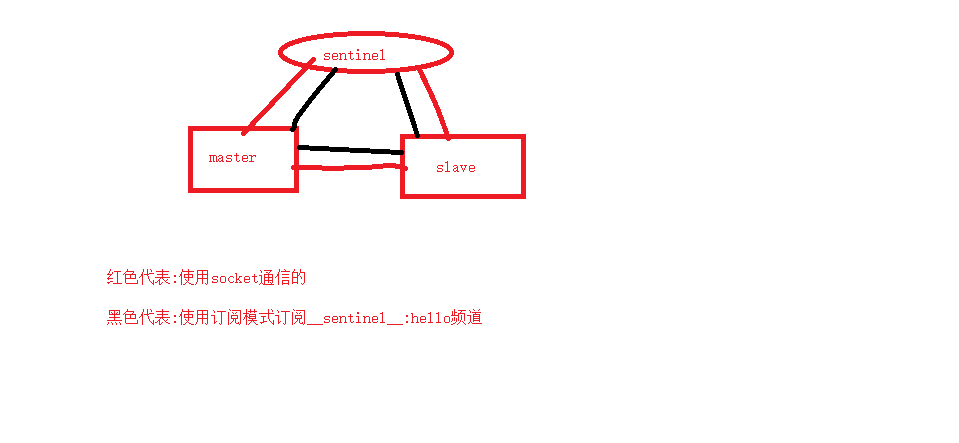

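2. Communication between Sentinel and the master and slave servers

Sentinel does most of its work in the timer function sentinelTimer, through the following call chain:

sentinelHandleDictOfRedisInstances->sentinelHandleRedisInstance->sentinelReconnectInstance

① Connecting to the master

sentinelReconnectInstance connects to the master server and subscribes to the "__sentinel__:hello" channel.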

/* Create the async connections for the instance link if the link

* is disconnected. Note that link->disconnected is true even if just

* one of the two links (commands and pub/sub) is missing. */

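/**

* Sentinel asynchronously connects to a monitored instance and subscribes to the hello channel

* @param ri information about the instance to connect to (for example a master)

*/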

void sentinelReconnectInstance(sentinelRedisInstance *ri) {

if (ri->link->disconnected == 0) return;

if (ri->addr->port == 0) return; /* port == 0 means invalid address. */

instanceLink *link = ri->link;

mstime_t now = mstime();

// Throttle reconnection attempts: at most one per SENTINEL_PING_PERIOD (a value in milliseconds)

if (now - ri->link->last_reconn_time < SENTINEL_PING_PERIOD)

{

return;

}

ri->link->last_reconn_time = now;

/* Commands connection. */

if (link->cc == NULL) {

link->cc = redisAsyncConnectBind(ri->addr->ip,ri->addr->port,NET_FIRST_BIND_ADDR);

if (link->cc->err) {

sentinelEvent(LL_DEBUG,"-cmd-link-reconnection",ri,"%@ #%s", link->cc->errstr);

instanceLinkCloseConnection(link,link->cc);

} else {

link->pending_commands = 0;

link->cc_conn_time = mstime();

link->cc->data = link;

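// Set up the callback functions

// This is effectively the network init step; the function that actually starts network I/O still has to be located

redisAeAttach(server.el,link->cc);

// hiredis connect callback, invoked once the connection is established; a write event is registered for it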

redisAsyncSetConnectCallback(link->cc, sentinelLinkEstablishedCallback);

redisAsyncSetDisconnectCallback(link->cc, sentinelDisconnectCallback);

sentinelSendAuthIfNeeded(ri,link->cc);

sentinelSetClientName(ri,link->cc,"cmd");

/* Send a PING ASAP when reconnecting. */

sentinelSendPing(ri);

}

}

/* Pub / Sub */

if ((ri->flags & (SRI_MASTER|SRI_SLAVE)) && link->pc == NULL) {

link->pc = redisAsyncConnectBind(ri->addr->ip,ri->addr->port,NET_FIRST_BIND_ADDR);

if (link->pc->err) {

sentinelEvent(LL_DEBUG,"-pubsub-link-reconnection",ri,"%@ #%s",

link->pc->errstr);

instanceLinkCloseConnection(link,link->pc);

} else {

int retval;

link->pc_conn_time = mstime();

link->pc->data = link;

redisAeAttach(server.el,link->pc);

// Authentication (sent below, right after the callbacks are registered)

redisAsyncSetConnectCallback(link->pc, sentinelLinkEstablishedCallback);

redisAsyncSetDisconnectCallback(link->pc, sentinelDisconnectCallback);

sentinelSendAuthIfNeeded(ri,link->pc);

sentinelSetClientName(ri,link->pc,"pubsub");

/* Now we subscribe to the Sentinels "Hello" channel. */

// Subscribe to the hello channel

retval = redisAsyncCommand(link->pc, sentinelReceiveHelloMessages, ri, "%s %s", sentinelInstanceMapCommand(ri,"SUBSCRIBE"), SENTINEL_HELLO_CHANNEL);

if (retval != C_OK) {

/* If we can't subscribe, the Pub/Sub connection is useless

* and we can simply disconnect it and try again. */

instanceLinkCloseConnection(link,link->pc);

return;

}

}

}

/* Clear the disconnected status only if we have both the connections

* (or just the commands connection if this is a sentinel instance). */

if (link->cc && (ri->flags & SRI_SENTINEL || link->pc))

link->disconnected = 0;

}
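Two small rules in the function above are easy to miss: reconnection is attempted at most once per SENTINEL_PING_PERIOD, and the link only counts as connected once the command connection exists and, for masters and slaves, the Pub/Sub connection exists too. A minimal sketch of just those two rules follows. The `sketch_` names and the simplified struct are hypothetical stand-ins for illustration and are not part of Redis.

```c
#include <stdint.h>

#define SKETCH_PING_PERIOD_MS 1000   /* mirrors SENTINEL_PING_PERIOD */

/* Hypothetical, simplified stand-in for instanceLink. */
typedef struct {
    int has_cmd_conn;        /* corresponds to link->cc != NULL */
    int has_pubsub_conn;     /* corresponds to link->pc != NULL */
    int64_t last_reconn_ms;  /* corresponds to link->last_reconn_time */
} sketch_link;

/* Returns 1 when a new reconnection attempt is allowed at now_ms. */
int sketch_may_reconnect(const sketch_link *l, int64_t now_ms) {
    return (now_ms - l->last_reconn_ms) >= SKETCH_PING_PERIOD_MS;
}

/* Returns 1 when the link can be treated as connected: a Sentinel peer
 * only needs the command connection, while a master or slave needs the
 * Pub/Sub connection as well. */
int sketch_link_connected(const sketch_link *l, int is_sentinel) {
    return l->has_cmd_conn && (is_sentinel || l->has_pubsub_conn);
}
```

With `last_reconn_ms = 5000`, an attempt at 5500 is refused and one at 6000 is allowed, which matches the early `return` in sentinelReconnectInstance.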

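② Fetching information about the master's slaves

sentinelSendPeriodicCommands sends the INFO command to obtain basic information about the slaves. The reply is handled in sentinelRefreshInstanceInfo, which records the slaves the master reports so that Sentinel can connect to them.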

/* Send periodic PING, INFO, and PUBLISH to the Hello channel to

* the specified master or slave instance. */

/**

* Periodic commands that Sentinel sends to master and slave instances

* Sentinel fetches the master's information with INFO

* @param ri the instance to send the commands to

*/

void sentinelSendPeriodicCommands(sentinelRedisInstance *ri) {

mstime_t now = mstime();

mstime_t info_period, ping_period;

int retval;

/* Return ASAP if we have already a PING or INFO already pending, or

* in the case the instance is not properly connected. */

if (ri->link->disconnected)

{

return;

}

/* For INFO, PING, PUBLISH that are not critical commands to send we

* also have a limit of SENTINEL_MAX_PENDING_COMMANDS. We don't

* want to use a lot of memory just because a link is not working

* properly (note that anyway there is a redundant protection about this,

* that is, the link will be disconnected and reconnected if a long

* timeout condition is detected. */

if (ri->link->pending_commands >= SENTINEL_MAX_PENDING_COMMANDS * ri->link->refcount)

{

return;

}

/* If this is a slave of a master in O_DOWN condition we start sending

* it INFO every second, instead of the usual SENTINEL_INFO_PERIOD

* period. In this state we want to closely monitor slaves in case they

* are turned into masters by another Sentinel, or by the sysadmin.

*

* Similarly we monitor the INFO output more often if the slave reports

* to be disconnected from the master, so that we can have a fresh

* disconnection time figure. */

if ((ri->flags & SRI_SLAVE) && ((ri->master->flags & (SRI_O_DOWN|SRI_FAILOVER_IN_PROGRESS)) || (ri->master_link_down_time != 0)))

{

info_period = 1000;

}

else

{

info_period = SENTINEL_INFO_PERIOD;

}

/* We ping instances every time the last received pong is older than

* the configured 'down-after-milliseconds' time, but every second

* anyway if 'down-after-milliseconds' is greater than 1 second. */

ping_period = ri->down_after_period;

if (ping_period > SENTINEL_PING_PERIOD)

{

ping_period = SENTINEL_PING_PERIOD;

}

// The reply callbacks are kept in a list, so they are processed in the order the commands were sent

/* Send INFO to masters and slaves, not sentinels. */

if ((ri->flags & SRI_SENTINEL) == 0 && (ri->info_refresh == 0 || (now - ri->info_refresh) > info_period))

{

retval = redisAsyncCommand(ri->link->cc, sentinelInfoReplyCallback, ri, "%s", sentinelInstanceMapCommand(ri,"INFO"));

if (retval == C_OK)

{

ri->link->pending_commands++;

}

}

/* Send PING to all the three kinds of instances. */

// Allow for network latency between sending a PING and receiving its reply

if ((now - ri->link->last_pong_time) > ping_period && (now - ri->link->last_ping_time) > ping_period/2)

{

sentinelSendPing(ri);

}

/* PUBLISH hello messages to all the three kinds of instances. */

if ((now - ri->last_pub_time) > SENTINEL_PUBLISH_PERIOD)

{

sentinelSendHello(ri);

}

}
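The timing decisions in sentinelSendPeriodicCommands boil down to three small computations: which INFO period to use, how the PING period is capped, and when a PING is actually due. The sketch below isolates them as pure functions, assuming the constant values shown in the source listing (SENTINEL_INFO_PERIOD 10000, SENTINEL_PING_PERIOD 1000). The `sketch_` function names and parameters are hypothetical.

```c
#include <stdint.h>

#define SKETCH_INFO_PERIOD_MS 10000  /* mirrors SENTINEL_INFO_PERIOD */
#define SKETCH_PING_PERIOD_MS 1000   /* mirrors SENTINEL_PING_PERIOD */

/* INFO is sent every second to a slave whose master is O_DOWN or in
 * failover, or whose replication link is down; otherwise every 10 s. */
int64_t sketch_info_period(int is_slave, int master_odown_or_failover,
                           int64_t master_link_down_ms) {
    if (is_slave && (master_odown_or_failover || master_link_down_ms != 0))
        return 1000;
    return SKETCH_INFO_PERIOD_MS;
}

/* The PING period is down-after-milliseconds, capped at one second. */
int64_t sketch_ping_period(int64_t down_after_ms) {
    return down_after_ms > SKETCH_PING_PERIOD_MS ? SKETCH_PING_PERIOD_MS
                                                 : down_after_ms;
}

/* A PING is due when the last pong is older than the period and the
 * last ping was sent more than half a period ago. */
int sketch_should_ping(int64_t now, int64_t last_pong, int64_t last_ping,
                       int64_t ping_period) {
    return (now - last_pong) > ping_period &&
           (now - last_ping) > ping_period / 2;
}
```

The half-period condition on the last ping keeps Sentinel from flooding an unresponsive instance while still retrying faster than the full period.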

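3. Communication between Sentinels

A Sentinel learns about the other Sentinels from the hello channel. sentinelProcessHelloMessage stores the basic information about each of them, calling createSentinelRedisInstance to create and save a record for a newly discovered Sentinel.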

/**

* Parses a message received on the hello channel

* @param hello the message payload received from the channel

* @param hello_len length of the payload

*/

void sentinelProcessHelloMessage(char *hello, int hello_len) {

/* Format is composed of 8 tokens:

* 0=ip,1=port,2=runid,3=current_epoch,4=master_name,

* 5=master_ip,6=master_port,7=master_config_epoch. */

int numtokens, port, removed, master_port;

uint64_t current_epoch, master_config_epoch;

// 127.0.0.1,26380,ba056b537690d37c699f4b482154a684ed6b4d4b,4,mymaster,127.0.0.1,6379,0

char **token = sdssplitlen(hello, hello_len, ",", 1, &numtokens);

sentinelRedisInstance *si, *master;

if (numtokens == 8) {

/* Obtain a reference to the master this hello message is about */

master = sentinelGetMasterByName(token[4]);

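// This Sentinel has no master with that name. Why is the message not processed? Presumably because a Sentinel only tracks the masters it monitors itself.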

if (!master)

{

goto cleanup; /* Unknown master, skip the message. */

}

/* First, try to see if we already have this sentinel. */

port = atoi(token[1]);

master_port = atoi(token[6]);

// If this Sentinel has no record of the other Sentinel yet, the record has to be stored

si = getSentinelRedisInstanceByAddrAndRunID( master->sentinels,token[0],port,token[2]);

current_epoch = strtoull(token[3],NULL,10);

master_config_epoch = strtoull(token[7],NULL,10);

if (!si) {

/* If not, remove all the sentinels that have the same runid

* because there was an address change, and add the same Sentinel

* with the new address back. */

removed = removeMatchingSentinelFromMaster(master,token[2]);

if (removed) {

sentinelEvent(LL_NOTICE,"+sentinel-address-switch",master,

"%@ ip %s port %d for %s", token[0],port,token[2]);

} else {

/* Check if there is another Sentinel with the same address this

* new one is reporting. What we do if this happens is to set its

* port to 0, to signal the address is invalid. We'll update it

* later if we get an HELLO message. */

sentinelRedisInstance *other = getSentinelRedisInstanceByAddrAndRunID(master->sentinels, token[0],port,NULL);

if (other) {

sentinelEvent(LL_NOTICE,"+sentinel-invalid-addr",other,"%@");

other->addr->port = 0; /* It means: invalid address. */

sentinelUpdateSentinelAddressInAllMasters(other);

}

}

/* Add the new sentinel. */

si = createSentinelRedisInstance(token[2],SRI_SENTINEL, token[0],port,master->quorum,master);

if (si) {

if (!removed)

{

sentinelEvent(LL_NOTICE,"+sentinel",si,"%@");

}

/* The runid is NULL after a new instance creation and

* for Sentinels we don't have a later chance to fill it,

* so do it now. */

si->runid = sdsnew(token[2]);

sentinelTryConnectionSharing(si);

if (removed)

{

sentinelUpdateSentinelAddressInAllMasters(si);

}

sentinelFlushConfig();

}

}

/* Update local current_epoch if received current_epoch is greater.*/

if (current_epoch > sentinel.current_epoch) {

sentinel.current_epoch = current_epoch;

sentinelFlushConfig();

sentinelEvent(LL_WARNING,"+new-epoch",master,"%llu",

(unsigned long long) sentinel.current_epoch);

}

/* Update master info if received configuration is newer. */

if (si && master->config_epoch < master_config_epoch) {

master->config_epoch = master_config_epoch;

if (master_port != master->addr->port ||

strcmp(master->addr->ip, token[5]))

{

sentinelAddr *old_addr;

sentinelEvent(LL_WARNING,"+config-update-from",si,"%@");

sentinelEvent(LL_WARNING,"+switch-master", master,"%s %s %d %s %d", master->name, master->addr->ip, master->addr->port, token[5], master_port);

old_addr = dupSentinelAddr(master->addr);

sentinelResetMasterAndChangeAddress(master, token[5], master_port);

sentinelCallClientReconfScript(master, SENTINEL_OBSERVER,"start", old_addr,master->addr);

releaseSentinelAddr(old_addr);

}

}

/* Update the state of the Sentinel. */

if (si) si->last_hello_time = mstime();

}

cleanup:

sdsfreesplitres(token,numtokens);

}
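To make the eight-token hello format concrete, here is a minimal standalone sketch that splits a payload the same way, without the sds library. It is only an illustration under stated assumptions: `sketch_hello`, `sketch_copy`, and `sketch_parse_hello` are hypothetical names, the fixed buffer sizes are arbitrary, and the real function does far more (Sentinel bookkeeping, epoch updates, master address switches).

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical container for the eight hello fields. */
typedef struct {
    char ip[64];
    int port;
    char runid[64];
    unsigned long long current_epoch;
    char master_name[64];
    char master_ip[64];
    int master_port;
    unsigned long long master_config_epoch;
} sketch_hello;

/* Copies src into dst (capacity cap), always NUL-terminating. */
static void sketch_copy(char *dst, size_t cap, const char *src) {
    strncpy(dst, src, cap - 1);
    dst[cap - 1] = '\0';
}

/* Returns 0 on success, -1 unless msg has exactly 8 comma-separated tokens. */
int sketch_parse_hello(const char *msg, sketch_hello *out) {
    char buf[512];
    char *tok[8];
    char *p;
    int n = 0;

    if (strlen(msg) >= sizeof(buf)) return -1;
    strcpy(buf, msg);

    /* Split in place on ','; unlike strtok this keeps empty fields. */
    p = buf;
    tok[n++] = p;
    while ((p = strchr(p, ',')) != NULL) {
        *p++ = '\0';
        if (n == 8) return -1;          /* a ninth token: reject */
        tok[n++] = p;
    }
    if (n != 8) return -1;

    sketch_copy(out->ip, sizeof(out->ip), tok[0]);
    out->port = atoi(tok[1]);
    sketch_copy(out->runid, sizeof(out->runid), tok[2]);
    out->current_epoch = strtoull(tok[3], NULL, 10);
    sketch_copy(out->master_name, sizeof(out->master_name), tok[4]);
    sketch_copy(out->master_ip, sizeof(out->master_ip), tok[5]);
    out->master_port = atoi(tok[6]);
    out->master_config_epoch = strtoull(tok[7], NULL, 10);
    return 0;
}
```

Feeding it the sample payload from the comment in the function above (`127.0.0.1,26380,ba05...,4,mymaster,127.0.0.1,6379,0`) yields port 26380, current epoch 4, and master `mymaster` at 127.0.0.1:6379.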

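4. How Sentinel drives a failover

sentinelCheckObjectivelyDown counts how many Sentinels consider the master down and compares that count with the configured quorum to decide whether the master really is down.

If it is, the master is flagged SRI_O_DOWN.

sentinelStartFailoverIfNeeded then calls sentinelStartFailover, which changes the master's state as follows: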

/* Setup the master state to start a failover. */

void sentinelStartFailover(sentinelRedisInstance *master) {

serverAssert(master->flags & SRI_MASTER);

master->failover_state = SENTINEL_FAILOVER_STATE_WAIT_START;

master->flags |= SRI_FAILOVER_IN_PROGRESS;

// Increment the current epoch here [a very important step: the epoch is what the Sentinels use to synchronize and negotiate with each other]

master->failover_epoch = ++sentinel.current_epoch;

sentinelEvent(LL_WARNING,"+new-epoch",master,"%llu", (unsigned long long) sentinel.current_epoch);

sentinelEvent(LL_WARNING,"+try-failover",master,"%@");

master->failover_start_time = mstime()+rand()%SENTINEL_MAX_DESYNC;

master->failover_state_change_time = mstime();

}

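The Sentinels then elect a leader among themselves, and the leader picks one of the failed master's slaves to promote to master.

A new leader Sentinel has to be elected every time a master goes down.

To ask for votes, a Sentinel sends the is-master-down-by-addr command to the other Sentinels; the election follows the Raft distributed consensus algorithm.

Votes are granted first come, first served: each Sentinel votes for the first candidate that asks it, which makes that candidate a local leader. To win, a candidate needs the votes of at least half of all Sentinels plus one; otherwise the election is repeated until a leader emerges.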
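The voting rule can be sketched in two small functions: one that grants a vote to the first candidate seen in a newer epoch, and one that tallies votes and requires a majority. This is a simplified illustration, not the Redis implementation. The `sketch_` names and the integer candidate ids are hypothetical (Redis identifies Sentinels by runid strings), and the extra comparison against the configured quorum reflects my understanding of how Redis picks the winner, so treat it as an assumption.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-Sentinel voting record: whom we voted for, and when. */
typedef struct {
    uint64_t leader_epoch;  /* epoch of the vote we last granted */
    int leader;             /* candidate id we voted for, -1 if none */
} sketch_voter;

/* First come, first served: the first candidate asking in a newer epoch
 * gets the vote; later requests in the same epoch get the same answer. */
int sketch_vote(sketch_voter *v, uint64_t req_epoch, int candidate) {
    if (req_epoch > v->leader_epoch) {
        v->leader_epoch = req_epoch;
        v->leader = candidate;
    }
    return v->leader;
}

/* votes[i] is the number of votes candidate i received; voters is the
 * total number of Sentinels. Returns the winning index, or -1 when no
 * candidate reaches both voters/2+1 and the quorum (assumed rule). */
int sketch_elect_leader(const unsigned *votes, size_t candidates,
                        unsigned voters, unsigned quorum) {
    unsigned majority = voters / 2 + 1;
    unsigned max_votes = 0;
    int winner = -1;
    size_t i;

    for (i = 0; i < candidates; i++) {
        if (votes[i] > max_votes) {
            max_votes = votes[i];
            winner = (int)i;
        }
    }
    if (winner >= 0 && (max_votes < majority || max_votes < quorum))
        winner = -1;
    return winner;
}
```

With five Sentinels the majority is 3, so a 3-to-2 split elects a leader while a 2-2-1 split does not and the election has to be retried in a later epoch.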

During a failover, Sentinel moves through the following states:

switch(ri->failover_state) {

case SENTINEL_FAILOVER_STATE_WAIT_START:

sentinelFailoverWaitStart(ri);

break;

case SENTINEL_FAILOVER_STATE_SELECT_SLAVE:

sentinelFailoverSelectSlave(ri);

break;

case SENTINEL_FAILOVER_STATE_SEND_SLAVEOF_NOONE:

sentinelFailoverSendSlaveOfNoOne(ri);

break;

case SENTINEL_FAILOVER_STATE_WAIT_PROMOTION:

sentinelFailoverWaitPromotion(ri);

break;

case SENTINEL_FAILOVER_STATE_RECONF_SLAVES: // tell the other slaves to replicate from the new master

sentinelFailoverReconfNextSlave(ri);

break;

}
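The switch above is one step of a state machine that is driven from the timer. As a rough aid to reading it, the sketch below lists the states with the numeric values of the SENTINEL_FAILOVER_STATE_* defines and walks the happy path only. The `SK_` and `sketch_` names are hypothetical, and the sketch assumes every step succeeds and that the state returns to "none" after the configuration update; timeouts and aborts are not modeled.

```c
/* Values mirror the SENTINEL_FAILOVER_STATE_* defines in sentinel.c. */
typedef enum {
    SK_NONE = 0,
    SK_WAIT_START = 1,
    SK_SELECT_SLAVE = 2,
    SK_SEND_SLAVEOF_NOONE = 3,
    SK_WAIT_PROMOTION = 4,
    SK_RECONF_SLAVES = 5,
    SK_UPDATE_CONFIG = 6
} sketch_failover_state;

/* Human-readable name for logging or debugging. */
const char *sketch_state_name(sketch_failover_state s) {
    switch (s) {
    case SK_NONE:               return "none";
    case SK_WAIT_START:         return "wait_start";
    case SK_SELECT_SLAVE:       return "select_slave";
    case SK_SEND_SLAVEOF_NOONE: return "send_slaveof_noone";
    case SK_WAIT_PROMOTION:     return "wait_promotion";
    case SK_RECONF_SLAVES:      return "reconf_slaves";
    case SK_UPDATE_CONFIG:      return "update_config";
    }
    return "unknown";
}

/* Happy-path successor of each state (assumption: nothing fails). */
sketch_failover_state sketch_next_state(sketch_failover_state s) {
    switch (s) {
    case SK_WAIT_START:         return SK_SELECT_SLAVE;
    case SK_SELECT_SLAVE:       return SK_SEND_SLAVEOF_NOONE;
    case SK_SEND_SLAVEOF_NOONE: return SK_WAIT_PROMOTION;
    case SK_WAIT_PROMOTION:     return SK_RECONF_SLAVES;
    case SK_RECONF_SLAVES:      return SK_UPDATE_CONFIG;
    case SK_UPDATE_CONFIG:      return SK_NONE;
    default:                    return SK_NONE;
    }
}
```

Because each timer tick handles at most one case, a failover takes several ticks to complete even when every step succeeds immediately.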

The Sentinel source code follows.

/* Is this instance down according to the configured quorum?

*

* Note that ODOWN is a weak quorum, it only means that enough Sentinels

* reported in a given time range that the instance was not reachable.

* However messages can be delayed so there are no strong guarantees about

* N instances agreeing at the same time about the down state. */

void sentinelCheckObjectivelyDown(sentinelRedisInstance *master) {

dictIterator *di;

dictEntry *de;

unsigned int quorum = 0, odown = 0;

if (master->flags & SRI_S_DOWN) {

/* Is down for enough sentinels? */

quorum = 1; /* the current sentinel. */

/* Count all the other sentinels. */

di = dictGetIterator(master->sentinels);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (ri->flags & SRI_MASTER_DOWN) quorum++;

}

dictReleaseIterator(di);

if (quorum >= master->quorum) odown = 1;

}

/* Set the flag accordingly to the outcome. */

if (odown) {

if ((master->flags & SRI_O_DOWN) == 0) {

sentinelEvent(LL_WARNING,"+odown",master,"%@ #quorum %d/%d", quorum, master->quorum);

master->flags |= SRI_O_DOWN;

master->o_down_since_time = mstime();

}

} else {

if (master->flags & SRI_O_DOWN) {

sentinelEvent(LL_WARNING,"-odown",master,"%@");

master->flags &= ~SRI_O_DOWN;

}

}

}
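Stripped of the dictionary iteration and event logging, the objective-down check above is a simple count. A minimal sketch, using a plain array in place of the `master->sentinels` dictionary (the `sketch_is_odown` name and its parameters are hypothetical):

```c
#include <stddef.h>

/* self_sdown: this Sentinel has flagged the master SRI_S_DOWN.
 * others_report_down[i]: non-zero if peer i has SRI_MASTER_DOWN set.
 * Returns 1 when at least quorum_needed Sentinels agree. */
int sketch_is_odown(int self_sdown, const int *others_report_down,
                    size_t n_others, unsigned quorum_needed) {
    unsigned agree;
    size_t i;

    if (!self_sdown) return 0;   /* our own view is a precondition */
    agree = 1;                   /* count the current Sentinel */
    for (i = 0; i < n_others; i++) {
        if (others_report_down[i]) agree++;
    }
    return agree >= quorum_needed;
}
```

Note that the local Sentinel must itself consider the master subjectively down before any peer reports are counted, so reports from others alone never produce O_DOWN.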

/* Redis Sentinel implementation

*

* Copyright (c) 2009-2012, Salvatore Sanfilippo <antirez at gmail dot com>

* All rights reserved.

*

* Redistribution and use in source and binary forms, with or without

* modification, are permitted provided that the following conditions are met:

*

* * Redistributions of source code must retain the above copyright notice,

* this list of conditions and the following disclaimer.

* * Redistributions in binary form must reproduce the above copyright

* notice, this list of conditions and the following disclaimer in the

* documentation and/or other materials provided with the distribution.

* * Neither the name of Redis nor the names of its contributors may be used

* to endorse or promote products derived from this software without

* specific prior written permission.

*

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

* AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

* IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

* ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE

* LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

* CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

* SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

* INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

* CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

* ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

* POSSIBILITY OF SUCH DAMAGE.

*/

#include "server.h"

#include "hiredis.h"

#include "async.h"

#include <ctype.h>

#include <arpa/inet.h>

#include <sys/socket.h>

#include <sys/wait.h>

#include <fcntl.h>

extern char **environ;

#define REDIS_SENTINEL_PORT 26379

/* ======================== Sentinel global state =========================== */

/* Address object, used to describe an ip:port pair. */

typedef struct sentinelAddr {

char *ip;

int port;

} sentinelAddr;

/* A Sentinel Redis Instance object is monitoring. */

#define SRI_MASTER (1<<0)

#define SRI_SLAVE (1<<1)

#define SRI_SENTINEL (1<<2)

#define SRI_S_DOWN (1<<3) /* Subjectively down (no quorum). */

#define SRI_O_DOWN (1<<4) /* Objectively down (confirmed by others). */

#define SRI_MASTER_DOWN (1<<5) /* A Sentinel with this flag set thinks that its master is down. */

#define SRI_FAILOVER_IN_PROGRESS (1<<6) /* Failover is in progress for

this master. */

#define SRI_PROMOTED (1<<7) /* Slave selected for promotion. */

#define SRI_RECONF_SENT (1<<8) /* SLAVEOF <newmaster> sent. */

#define SRI_RECONF_INPROG (1<<9) /* Slave synchronization in progress. */

#define SRI_RECONF_DONE (1<<10) /* Slave synchronized with new master. */

#define SRI_FORCE_FAILOVER (1<<11) /* Force failover with master up. */

#define SRI_SCRIPT_KILL_SENT (1<<12) /* SCRIPT KILL already sent on -BUSY */

/* Note: times are in milliseconds. */

#define SENTINEL_INFO_PERIOD 10000

#define SENTINEL_PING_PERIOD 1000

#define SENTINEL_ASK_PERIOD 1000

#define SENTINEL_PUBLISH_PERIOD 2000

#define SENTINEL_DEFAULT_DOWN_AFTER 30000

#define SENTINEL_HELLO_CHANNEL "__sentinel__:hello"

#define SENTINEL_TILT_TRIGGER 2000

#define SENTINEL_TILT_PERIOD (SENTINEL_PING_PERIOD*30)

#define SENTINEL_DEFAULT_SLAVE_PRIORITY 100

#define SENTINEL_SLAVE_RECONF_TIMEOUT 10000

#define SENTINEL_DEFAULT_PARALLEL_SYNCS 1

#define SENTINEL_MIN_LINK_RECONNECT_PERIOD 15000

#define SENTINEL_DEFAULT_FAILOVER_TIMEOUT (60*3*1000)

#define SENTINEL_MAX_PENDING_COMMANDS 100

#define SENTINEL_ELECTION_TIMEOUT 10000

#define SENTINEL_MAX_DESYNC 1000

#define SENTINEL_DEFAULT_DENY_SCRIPTS_RECONFIG 1

/* Failover machine different states. */

#define SENTINEL_FAILOVER_STATE_NONE 0 /* No failover in progress. */

#define SENTINEL_FAILOVER_STATE_WAIT_START 1 /* Wait for failover_start_time*/

#define SENTINEL_FAILOVER_STATE_SELECT_SLAVE 2 /* Select slave to promote */

#define SENTINEL_FAILOVER_STATE_SEND_SLAVEOF_NOONE 3 /* Slave -> Master */

#define SENTINEL_FAILOVER_STATE_WAIT_PROMOTION 4 /* Wait slave to change role */

#define SENTINEL_FAILOVER_STATE_RECONF_SLAVES 5 /* SLAVEOF newmaster */

#define SENTINEL_FAILOVER_STATE_UPDATE_CONFIG 6 /* Monitor promoted slave. */

#define SENTINEL_MASTER_LINK_STATUS_UP 0

#define SENTINEL_MASTER_LINK_STATUS_DOWN 1

/* Generic flags that can be used with different functions.

* They use higher bits to avoid colliding with the function specific

* flags. */

#define SENTINEL_NO_FLAGS 0

#define SENTINEL_GENERATE_EVENT (1<<16)

#define SENTINEL_LEADER (1<<17)

#define SENTINEL_OBSERVER (1<<18)

/* Script execution flags and limits. */

#define SENTINEL_SCRIPT_NONE 0

#define SENTINEL_SCRIPT_RUNNING 1

#define SENTINEL_SCRIPT_MAX_QUEUE 256

#define SENTINEL_SCRIPT_MAX_RUNNING 16

#define SENTINEL_SCRIPT_MAX_RUNTIME 60000 /* 60 seconds max exec time. */

#define SENTINEL_SCRIPT_MAX_RETRY 10

#define SENTINEL_SCRIPT_RETRY_DELAY 30000 /* 30 seconds between retries. */

/* SENTINEL SIMULATE-FAILURE command flags. */

#define SENTINEL_SIMFAILURE_NONE 0

#define SENTINEL_SIMFAILURE_CRASH_AFTER_ELECTION (1<<0)

#define SENTINEL_SIMFAILURE_CRASH_AFTER_PROMOTION (1<<1)

/* The link to a sentinelRedisInstance. When we have the same set of Sentinels

* monitoring many masters, we have different instances representing the

* same Sentinels, one per master, and we need to share the hiredis connections

* among them. Otherwise if 5 Sentinels are monitoring 100 masters we create

* 500 outgoing connections instead of 5.

*

* So this structure represents a reference counted link in terms of the two

* hiredis connections for commands and Pub/Sub, and the fields needed for

* failure detection, since the ping/pong time are now local to the link: if

* the link is available, the instance is available. This way we don't just

* have 5 connections instead of 500, we also send 5 pings instead of 500.

*

* Links are shared only for Sentinels: master and slave instances have

* a link with refcount = 1, always. */

typedef struct instanceLink {

int refcount; /* Number of sentinelRedisInstance owners. */

int disconnected; /* Non-zero if we need to reconnect cc or pc. */

int pending_commands; /* Number of commands sent waiting for a reply. */

redisAsyncContext *cc; /* Hiredis context for commands. */

redisAsyncContext *pc; /* Hiredis context for Pub / Sub. */

mstime_t cc_conn_time; /* cc connection time. */

mstime_t pc_conn_time; /* pc connection time. */

mstime_t pc_last_activity; /* Last time we received any message. */

mstime_t last_avail_time; /* Last time the instance replied to ping with

a reply we consider valid. */

mstime_t act_ping_time; /* Time at which the last pending ping (no pong

received after it) was sent. This field is

set to 0 when a pong is received, and set again

to the current time if the value is 0 and a new

ping is sent. */

mstime_t last_ping_time; /* Time at which we sent the last ping. This is

only used to avoid sending too many pings

during failure. Idle time is computed using

the act_ping_time field. */

mstime_t last_pong_time; /* Last time the instance replied to ping,

whatever the reply was. That's used to check

if the link is idle and must be reconnected. */

mstime_t last_reconn_time; /* Last reconnection attempt performed when

the link was down. */

} instanceLink;

typedef struct sentinelRedisInstance {

int flags; /* See SRI_... defines */

char *name; /* Master name from the point of view of this sentinel. */

char *runid; /* Run ID of this instance, or unique ID if is a Sentinel.*/

uint64_t config_epoch; /* Configuration epoch. */

sentinelAddr *addr; /* Master host. */

instanceLink *link; /* Link to the instance, may be shared for Sentinels. */

mstime_t last_pub_time; /* Last time we sent hello via Pub/Sub. */

mstime_t last_hello_time; /* Only used if SRI_SENTINEL is set. Last time

we received a hello from this Sentinel

via Pub/Sub. */

mstime_t last_master_down_reply_time; /* Time of last reply to

SENTINEL is-master-down command. */

mstime_t s_down_since_time; /* Subjectively down since time. */

mstime_t o_down_since_time; /* Objectively down since time. */

mstime_t down_after_period; /* Consider it down after that period. */

mstime_t info_refresh; /* Time at which we received INFO output from it. */

dict *renamed_commands; /* Commands renamed in this instance:

Sentinel will use the alternative commands

mapped on this table to send things like

SLAVEOF, CONFIG, INFO, ... */

/* Role and the first time we observed it.

* This is useful in order to delay replacing what the instance reports

* with our own configuration. We need to always wait some time in order

* to give a chance to the leader to report the new configuration before

* we do silly things. */

int role_reported;

mstime_t role_reported_time;

mstime_t slave_conf_change_time; /* Last time slave master addr changed. */

/* Master specific. */

dict *sentinels; /* Other sentinels monitoring the same master. */

dict *slaves; /* Slaves for this master instance. */

unsigned int quorum;/* Number of sentinels that need to agree on failure. */

int parallel_syncs; /* How many slaves to reconfigure at same time. */

char *auth_pass; /* Password to use for AUTH against master & slaves. */

/* Slave specific. */

mstime_t master_link_down_time; /* Slave replication link down time. */

int slave_priority; /* Slave priority according to its INFO output. */

mstime_t slave_reconf_sent_time; /* Time at which we sent SLAVE OF <new> */

struct sentinelRedisInstance *master; /* Master instance if it's slave. */

char *slave_master_host; /* Master host as reported by INFO */

int slave_master_port; /* Master port as reported by INFO */

int slave_master_link_status; /* Master link status as reported by INFO */

unsigned long long slave_repl_offset; /* Slave replication offset. */

/* Failover */

char *leader; /* If this is a master instance, this is the runid of

the Sentinel that should perform the failover. If

this is a Sentinel, this is the runid of the Sentinel

that this Sentinel voted as leader. */

uint64_t leader_epoch; /* Epoch of the 'leader' field. */

uint64_t failover_epoch; /* Epoch of the currently started failover. */

int failover_state; /* See SENTINEL_FAILOVER_STATE_* defines. */

mstime_t failover_state_change_time;

mstime_t failover_start_time; /* Last failover attempt start time. */

mstime_t failover_timeout; /* Max time to refresh failover state. */

mstime_t failover_delay_logged; /* For what failover_start_time value we

logged the failover delay. */

struct sentinelRedisInstance *promoted_slave; /* Promoted slave instance. */

/* Scripts executed to notify admin or reconfigure clients: when they

* are set to NULL no script is executed. */

char *notification_script;

char *client_reconfig_script;

sds info; /* cached INFO output */

} sentinelRedisInstance;

/* Main state. */

struct sentinelState {

char myid[CONFIG_RUN_ID_SIZE+1]; /* This sentinel ID. */

uint64_t current_epoch; /* Current epoch. */

dict *masters; /* Dictionary of master sentinelRedisInstances.

Key is the instance name, value is the

sentinelRedisInstance structure pointer. */

int tilt; /* Are we in TILT mode? */

int running_scripts; /* Number of scripts in execution right now. */

mstime_t tilt_start_time; /* When TILT started. */

mstime_t previous_time; /* Last time we ran the time handler. */

list *scripts_queue; /* Queue of user scripts to execute. */

char *announce_ip; /* IP addr that is gossiped to other sentinels if

not NULL. */

int announce_port; /* Port that is gossiped to other sentinels if

non zero. */

unsigned long simfailure_flags; /* Failures simulation. */

int deny_scripts_reconfig; /* Allow SENTINEL SET ... to change script

paths at runtime? */

} sentinel;

/* A script execution job. */

typedef struct sentinelScriptJob {

int flags; /* Script job flags: SENTINEL_SCRIPT_* */

int retry_num; /* Number of times we tried to execute it. */

char **argv; /* Arguments to call the script. */

mstime_t start_time; /* Script execution time if the script is running,

otherwise 0 if we are allowed to retry the

execution at any time. If the script is not

running and it's not 0, it means: do not run

before the specified time. */

pid_t pid; /* Script execution pid. */

} sentinelScriptJob;

/* ======================= hiredis ae.c adapters =============================

* Note: this implementation is taken from hiredis/adapters/ae.h, however

* we have our modified copy for Sentinel in order to use our allocator

* and to have full control over how the adapter works. */

typedef struct redisAeEvents {

redisAsyncContext *context;

aeEventLoop *loop;

int fd;

int reading, writing;

} redisAeEvents;

/**

* Read-event handler that wraps Sentinel's asynchronous connections

* @param el

* @param fd

* @param privdata

* @param mask

*/

static void redisAeReadEvent(aeEventLoop *el, int fd, void *privdata, int mask) {

((void)el); ((void)fd); ((void)mask);

redisAeEvents *e = (redisAeEvents*)privdata;

redisAsyncHandleRead(e->context);

}

static void redisAeWriteEvent(aeEventLoop *el, int fd, void *privdata, int mask) {

((void)el); ((void)fd); ((void)mask);

redisAeEvents *e = (redisAeEvents*)privdata;

redisAsyncHandleWrite(e->context);

}

static void redisAeAddRead(void *privdata) {

redisAeEvents *e = (redisAeEvents*)privdata;

aeEventLoop *loop = e->loop;

if (!e->reading) {

e->reading = 1;

aeCreateFileEvent(loop, e->fd, AE_READABLE, redisAeReadEvent, e);

}

}

static void redisAeDelRead(void *privdata) {

redisAeEvents *e = (redisAeEvents*)privdata;

aeEventLoop *loop = e->loop;

if (e->reading) {

e->reading = 0;

aeDeleteFileEvent(loop,e->fd,AE_READABLE);

}

}

static void redisAeAddWrite(void *privdata) {

redisAeEvents *e = (redisAeEvents*)privdata;

aeEventLoop *loop = e->loop;

if (!e->writing) {

e->writing = 1;

aeCreateFileEvent(loop, e->fd, AE_WRITABLE, redisAeWriteEvent, e);

}

}

static void redisAeDelWrite(void *privdata) {

redisAeEvents *e = (redisAeEvents*)privdata;

aeEventLoop *loop = e->loop;

if (e->writing) {

e->writing = 0;

aeDeleteFileEvent(loop,e->fd,AE_WRITABLE);

}

}

static void redisAeCleanup(void *privdata) {

redisAeEvents *e = (redisAeEvents*)privdata;

redisAeDelRead(privdata);

redisAeDelWrite(privdata);

zfree(e);

}

/**

* Set up the callback functions (attach the hiredis async context to the ae event loop)

*/

static int redisAeAttach(aeEventLoop *loop, redisAsyncContext *ac) {

redisContext *c = &(ac->c);

redisAeEvents *e;

/* Nothing should be attached when something is already attached */

if (ac->ev.data != NULL)

return C_ERR;

/* Create container for context and r/w events */

e = (redisAeEvents*)zmalloc(sizeof(*e));

e->context = ac;

e->loop = loop;

e->fd = c->fd;

e->reading = e->writing = 0;

/* Register functions to startup / stop listening for events */

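// Who calls these hooks? I have not found the call site yet; they appear to be invoked from inside hiredis (the _EL_ADD_READ / _EL_ADD_WRITE style macros in async.c)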

ac->ev.addRead = redisAeAddRead;

ac->ev.delRead = redisAeDelRead;

ac->ev.addWrite = redisAeAddWrite;

ac->ev.delWrite = redisAeDelWrite;

ac->ev.cleanup = redisAeCleanup;

ac->ev.data = e;

return C_OK;

}

/* ============================= Prototypes ================================= */

void sentinelLinkEstablishedCallback(const redisAsyncContext *c, int status);

void sentinelDisconnectCallback(const redisAsyncContext *c, int status);

void sentinelReceiveHelloMessages(redisAsyncContext *c, void *reply, void *privdata);

sentinelRedisInstance *sentinelGetMasterByName(char *name);

char *sentinelGetSubjectiveLeader(sentinelRedisInstance *master);

char *sentinelGetObjectiveLeader(sentinelRedisInstance *master);

int yesnotoi(char *s);

void instanceLinkConnectionError(const redisAsyncContext *c);

const char *sentinelRedisInstanceTypeStr(sentinelRedisInstance *ri);

void sentinelAbortFailover(sentinelRedisInstance *ri);

void sentinelEvent(int level, char *type, sentinelRedisInstance *ri, const char *fmt, ...);

sentinelRedisInstance *sentinelSelectSlave(sentinelRedisInstance *master);

void sentinelScheduleScriptExecution(char *path, ...);

void sentinelStartFailover(sentinelRedisInstance *master);

void sentinelDiscardReplyCallback(redisAsyncContext *c, void *reply, void *privdata);

int sentinelSendSlaveOf(sentinelRedisInstance *ri, char *host, int port);

char *sentinelVoteLeader(sentinelRedisInstance *master, uint64_t req_epoch, char *req_runid, uint64_t *leader_epoch);

void sentinelFlushConfig(void);

void sentinelGenerateInitialMonitorEvents(void);

int sentinelSendPing(sentinelRedisInstance *ri);

int sentinelForceHelloUpdateForMaster(sentinelRedisInstance *master);

sentinelRedisInstance *getSentinelRedisInstanceByAddrAndRunID(dict *instances, char *ip, int port, char *runid);

void sentinelSimFailureCrash(void);

/* ========================= Dictionary types =============================== */

uint64_t dictSdsHash(const void *key);

uint64_t dictSdsCaseHash(const void *key);

int dictSdsKeyCompare(void *privdata, const void *key1, const void *key2);

int dictSdsKeyCaseCompare(void *privdata, const void *key1, const void *key2);

void releaseSentinelRedisInstance(sentinelRedisInstance *ri);

void dictInstancesValDestructor (void *privdata, void *obj) {

UNUSED(privdata);

releaseSentinelRedisInstance(obj);

}

/* Instance name (sds) -> instance (sentinelRedisInstance pointer)

*

* also used for: sentinelRedisInstance->sentinels dictionary that maps

* sentinels ip:port to last seen time in Pub/Sub hello message. */

dictType instancesDictType = {

dictSdsHash, /* hash function */

NULL, /* key dup */

NULL, /* val dup */

dictSdsKeyCompare, /* key compare */

NULL, /* key destructor */

dictInstancesValDestructor /* val destructor */

};

/* Instance runid (sds) -> votes (long casted to void*)

*

* This is useful into sentinelGetObjectiveLeader() function in order to

* count the votes and understand who is the leader. */

dictType leaderVotesDictType = {

dictSdsHash, /* hash function */

NULL, /* key dup */

NULL, /* val dup */

dictSdsKeyCompare, /* key compare */

NULL, /* key destructor */

NULL /* val destructor */

};

/* Instance renamed commands table. */

dictType renamedCommandsDictType = {

dictSdsCaseHash, /* hash function */

NULL, /* key dup */

NULL, /* val dup */

dictSdsKeyCaseCompare, /* key compare */

dictSdsDestructor, /* key destructor */

dictSdsDestructor /* val destructor */

};

/* =========================== Initialization =============================== */

void sentinelCommand(client *c);

void sentinelInfoCommand(client *c);

void sentinelSetCommand(client *c);

void sentinelPublishCommand(client *c);

void sentinelRoleCommand(client *c);

struct redisCommand sentinelcmds[] = {

{"ping",pingCommand,1,"",0,NULL,0,0,0,0,0},

{"sentinel",sentinelCommand,-2,"",0,NULL,0,0,0,0,0},

{"subscribe",subscribeCommand,-2,"",0,NULL,0,0,0,0,0}, // subscribe to channels

{"unsubscribe",unsubscribeCommand,-1,"",0,NULL,0,0,0,0,0},

{"psubscribe",psubscribeCommand,-2,"",0,NULL,0,0,0,0,0},

{"punsubscribe",punsubscribeCommand,-1,"",0,NULL,0,0,0,0,0},

{"publish",sentinelPublishCommand,3,"",0,NULL,0,0,0,0,0},

{"info",sentinelInfoCommand,-1,"",0,NULL,0,0,0,0,0},

{"role",sentinelRoleCommand,1,"l",0,NULL,0,0,0,0,0},

{"client",clientCommand,-2,"rs",0,NULL,0,0,0,0,0},

{"shutdown",shutdownCommand,-1,"",0,NULL,0,0,0,0,0}

};

/* This function overwrites a few normal Redis config default with Sentinel

* specific defaults. */

void initSentinelConfig(void) {

server.port = REDIS_SENTINEL_PORT;

}

/* Perform the Sentinel mode initialization. */

void initSentinel(void) {

unsigned int j;

/* Remove usual Redis commands from the command table, then just add

* the SENTINEL command. */

dictEmpty(server.commands,NULL);

for (j = 0; j < sizeof(sentinelcmds)/sizeof(sentinelcmds[0]); j++)

{

int retval;

struct redisCommand *cmd = sentinelcmds+j;

retval = dictAdd(server.commands, sdsnew(cmd->name), cmd);

serverAssert(retval == DICT_OK);

}

/* Initialize various data structures. */

sentinel.current_epoch = 0;

sentinel.masters = dictCreate(&instancesDictType,NULL);

sentinel.tilt = 0;

sentinel.tilt_start_time = 0;

sentinel.previous_time = mstime();

sentinel.running_scripts = 0;

sentinel.scripts_queue = listCreate();

sentinel.announce_ip = NULL;

sentinel.announce_port = 0;

sentinel.simfailure_flags = SENTINEL_SIMFAILURE_NONE;

sentinel.deny_scripts_reconfig = SENTINEL_DEFAULT_DENY_SCRIPTS_RECONFIG;

memset(sentinel.myid,0,sizeof(sentinel.myid));

}

/* This function gets called when the server is in Sentinel mode, started,

* loaded the configuration, and is ready for normal operations. */

void sentinelIsRunning(void) {

int j;

if (server.configfile == NULL) {

serverLog(LL_WARNING,

"Sentinel started without a config file. Exiting...");

exit(1);

} else if (access(server.configfile,W_OK) == -1) {

serverLog(LL_WARNING,

"Sentinel config file %s is not writable: %s. Exiting...",

server.configfile,strerror(errno));

exit(1);

}

/* If this Sentinel has yet no ID set in the configuration file, we

* pick a random one and persist the config on disk. From now on this

* will be this Sentinel ID across restarts. */

for (j = 0; j < CONFIG_RUN_ID_SIZE; j++)

if (sentinel.myid[j] != 0) break;

if (j == CONFIG_RUN_ID_SIZE) {

/* Pick ID and persist the config. */

getRandomHexChars(sentinel.myid,CONFIG_RUN_ID_SIZE);

sentinelFlushConfig();

}

/* Log its ID to make debugging of issues simpler. */

serverLog(LL_WARNING,"Sentinel ID is %s", sentinel.myid);

/* We want to generate a +monitor event for every configured master

* at startup. */

sentinelGenerateInitialMonitorEvents();

}

/* ============================== sentinelAddr ============================== */

/* Create a sentinelAddr object and return it on success.

* On error NULL is returned and errno is set to:

* ENOENT: Can't resolve the hostname.

* EINVAL: Invalid port number.

*/

sentinelAddr *createSentinelAddr(char *hostname, int port) {

char ip[NET_IP_STR_LEN];

sentinelAddr *sa;

if (port < 0 || port > 65535) {

errno = EINVAL;

return NULL;

}

if (anetResolve(NULL,hostname,ip,sizeof(ip)) == ANET_ERR) {

errno = ENOENT;

return NULL;

}

sa = zmalloc(sizeof(*sa));

sa->ip = sdsnew(ip);

sa->port = port;

return sa;

}

/* Return a duplicate of the source address. */

sentinelAddr *dupSentinelAddr(sentinelAddr *src) {

sentinelAddr *sa;

sa = zmalloc(sizeof(*sa));

sa->ip = sdsnew(src->ip);

sa->port = src->port;

return sa;

}

/* Free a Sentinel address. Can't fail. */

void releaseSentinelAddr(sentinelAddr *sa) {

sdsfree(sa->ip);

zfree(sa);

}

/* Return non-zero if two addresses are equal. */

int sentinelAddrIsEqual(sentinelAddr *a, sentinelAddr *b) {

return a->port == b->port && !strcasecmp(a->ip,b->ip);

}

/* =========================== Events notification ========================== */

/* Send an event to log, pub/sub, user notification script.

*

* 'level' is the log level for logging. Only LL_WARNING events will trigger

* the execution of the user notification script.

*

* 'type' is the message type, also used as a pub/sub channel name.

*

* 'ri', is the redis instance target of this event if applicable, and is

* used to obtain the path of the notification script to execute.

*

* The remaining arguments are printf-alike.

* If the format specifier starts with the two characters "%@" then ri is

* not NULL, and the message is prefixed with an instance identifier in the

* following format:

*

* <instance type> <instance name> <ip> <port>

*

* If the instance type is not master, then an additional string is

* added to specify the originating master:

*

* @ <master name> <master ip> <master port>

*

* Any other specifier after "%@" is processed by printf itself.

*/

void sentinelEvent(int level, char *type, sentinelRedisInstance *ri,

const char *fmt, ...) {

va_list ap;

char msg[LOG_MAX_LEN];

robj *channel, *payload;

/* Handle %@ */

if (fmt[0] == '%' && fmt[1] == '@') {

sentinelRedisInstance *master = (ri->flags & SRI_MASTER) ?

NULL : ri->master;

if (master) {

snprintf(msg, sizeof(msg), "%s %s %s %d @ %s %s %d", sentinelRedisInstanceTypeStr(ri), ri->name, ri->addr->ip, ri->addr->port, master->name, master->addr->ip, master->addr->port);

} else {

snprintf(msg, sizeof(msg), "%s %s %s %d", sentinelRedisInstanceTypeStr(ri), ri->name, ri->addr->ip, ri->addr->port);

}

fmt += 2;

} else {

msg[0] = '\0';

}

/* Use vsnprintf for the rest of the formatting if any. */

if (fmt[0] != '\0') {

va_start(ap, fmt);

vsnprintf(msg+strlen(msg), sizeof(msg)-strlen(msg), fmt, ap);

va_end(ap);

}

/* Log the message if the log level allows it to be logged. */

if (level >= server.verbosity)

serverLog(level,"%s %s",type,msg);

/* Publish the message via Pub/Sub if it's not a debugging one. */

if (level != LL_DEBUG) {

channel = createStringObject(type,strlen(type));

payload = createStringObject(msg,strlen(msg));

pubsubPublishMessage(channel,payload);

decrRefCount(channel);

decrRefCount(payload);

}

/* Call the notification script if applicable. */

if (level == LL_WARNING && ri != NULL) {

sentinelRedisInstance *master = (ri->flags & SRI_MASTER) ?

ri : ri->master;

if (master && master->notification_script) {

sentinelScheduleScriptExecution(master->notification_script,

type,msg,NULL);

}

}

}
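The "%@" prefix handling in sentinelEvent() is easier to follow in isolation. The following is a minimal sketch, not the Redis implementation: `demo_instance` and `demo_format_event` are hypothetical names introduced only for illustration, and the struct carries just the fields needed to build the prefix. It shows how the instance identifier (and, for non-masters, the "@ master" suffix) is written first and the remaining format string is appended afterwards.

```c
#include <assert.h>
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical, simplified stand-in for sentinelRedisInstance. */
typedef struct demo_instance {
    const char *type;              /* "master", "slave" or "sentinel" */
    const char *name;
    const char *ip;
    int port;
    struct demo_instance *master;  /* NULL when the instance is a master */
} demo_instance;

/* Build an event payload: optional "%@" instance prefix, then the rest of
 * the printf-style format. 'msg' is always NUL terminated. */
void demo_format_event(char *msg, size_t len, const demo_instance *ri,
                       const char *fmt, ...) {
    va_list ap;

    assert(len > 0);
    msg[0] = '\0';

    if (fmt[0] == '%' && fmt[1] == '@') {
        assert(ri != NULL); /* "%@" requires an instance. */
        if (ri->master) {
            snprintf(msg, len, "%s %s %s %d @ %s %s %d",
                     ri->type, ri->name, ri->ip, ri->port,
                     ri->master->name, ri->master->ip, ri->master->port);
        } else {
            snprintf(msg, len, "%s %s %s %d",
                     ri->type, ri->name, ri->ip, ri->port);
        }
        fmt += 2; /* Skip the "%@" specifier. */
    }

    /* Append whatever is left of the format string, if anything. */
    if (fmt[0] != '\0') {
        size_t used = strlen(msg);
        va_start(ap, fmt);
        vsnprintf(msg + used, len - used, fmt, ap);
        va_end(ap);
    }
}
```

Calling `demo_format_event(buf, sizeof(buf), &master, "%@ quorum %d", 2)` for a master fills `buf` with the prefix followed by `quorum 2`, which mirrors the payload of the `+monitor` event.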

/* This function is called only at startup and is used to generate a

* +monitor event for every configured master. The same events are also

* generated when a master to monitor is added at runtime via the

* SENTINEL MONITOR command. */

void sentinelGenerateInitialMonitorEvents(void) {

dictIterator *di;

dictEntry *de;

di = dictGetIterator(sentinel.masters);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

sentinelEvent(LL_WARNING,"+monitor",ri,"%@ quorum %d",ri->quorum);

}

dictReleaseIterator(di);

}

/* ============================ script execution ============================ */

/* Release a script job structure and all the associated data. */

void sentinelReleaseScriptJob(sentinelScriptJob *sj) {

int j = 0;

while(sj->argv[j])

{

sdsfree(sj->argv[j++]);

}

zfree(sj->argv);

zfree(sj);

}

#define SENTINEL_SCRIPT_MAX_ARGS 16

void sentinelScheduleScriptExecution(char *path, ...) {

va_list ap;

char *argv[SENTINEL_SCRIPT_MAX_ARGS+1];

int argc = 1;

sentinelScriptJob *sj;

va_start(ap, path);

while(argc < SENTINEL_SCRIPT_MAX_ARGS) {

argv[argc] = va_arg(ap,char*);

if (!argv[argc]) break;

argv[argc] = sdsnew(argv[argc]); /* Copy the string. */

argc++;

}

va_end(ap);

argv[0] = sdsnew(path);

sj = zmalloc(sizeof(*sj));

sj->flags = SENTINEL_SCRIPT_NONE;

sj->retry_num = 0;

sj->argv = zmalloc(sizeof(char*)*(argc+1));

sj->start_time = 0;

sj->pid = 0;

memcpy(sj->argv,argv,sizeof(char*)*(argc+1));

listAddNodeTail(sentinel.scripts_queue,sj);

/* Remove the oldest non running script if we already hit the limit. */

if (listLength(sentinel.scripts_queue) > SENTINEL_SCRIPT_MAX_QUEUE) {

listNode *ln;

listIter li;

listRewind(sentinel.scripts_queue,&li);

while ((ln = listNext(&li)) != NULL) {

sj = ln->value;

if (sj->flags & SENTINEL_SCRIPT_RUNNING) continue;

/* The first node is the oldest as we add on tail. */

listDelNode(sentinel.scripts_queue,ln);

sentinelReleaseScriptJob(sj);

break;

}

serverAssert(listLength(sentinel.scripts_queue) <= SENTINEL_SCRIPT_MAX_QUEUE);

}

}

/* Lookup a script in the scripts queue via pid, and returns the list node

* (so that we can easily remove it from the queue if needed). */

listNode *sentinelGetScriptListNodeByPid(pid_t pid) {

listNode *ln;

listIter li;

listRewind(sentinel.scripts_queue,&li);

while ((ln = listNext(&li)) != NULL) {

sentinelScriptJob *sj = ln->value;

if ((sj->flags & SENTINEL_SCRIPT_RUNNING) && sj->pid == pid)

return ln;

}

return NULL;

}

/* Run pending scripts if we are not already at max number of running

* scripts. */

void sentinelRunPendingScripts(void) {

listNode *ln;

listIter li;

mstime_t now = mstime();

/* Find jobs that are not running and run them, from the top to the

* tail of the queue, so we run older jobs first. */

listRewind(sentinel.scripts_queue,&li);

while (sentinel.running_scripts < SENTINEL_SCRIPT_MAX_RUNNING &&

(ln = listNext(&li)) != NULL)

{

sentinelScriptJob *sj = ln->value;

pid_t pid;

/* Skip if already running. */

if (sj->flags & SENTINEL_SCRIPT_RUNNING) continue;

/* Skip if it's a retry, but not enough time has elapsed. */

if (sj->start_time && sj->start_time > now) continue;

sj->flags |= SENTINEL_SCRIPT_RUNNING;

sj->start_time = mstime();

sj->retry_num++;

pid = fork();

if (pid == -1) {

/* Parent (fork error).

* We report fork errors as signal 99, in order to unify the

* reporting with other kind of errors. */

sentinelEvent(LL_WARNING,"-script-error",NULL,

"%s %d %d", sj->argv[0], 99, 0);

sj->flags &= ~SENTINEL_SCRIPT_RUNNING;

sj->pid = 0;

} else if (pid == 0) {

/* Child */

execve(sj->argv[0],sj->argv,environ);

/* If we are here an error occurred. */

_exit(2); /* Don't retry execution. */

} else {

sentinel.running_scripts++;

sj->pid = pid;

sentinelEvent(LL_DEBUG,"+script-child",NULL,"%ld",(long)pid);

}

}

}

/* How much to delay the execution of a script that we need to retry after

* an error?

*

* We double the retry delay for every further retry we do. So for instance

* if RETRY_DELAY is set to 30 seconds and the max number of retries is 10

* starting from the second attempt to execute the script the delays are:

* 30 sec, 60 sec, 2 min, 4 min, 8 min, 16 min, 32 min, 64 min, 128 min. */

mstime_t sentinelScriptRetryDelay(int retry_num) {

mstime_t delay = SENTINEL_SCRIPT_RETRY_DELAY;

while (retry_num-- > 1) delay *= 2;

return delay;

}
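The doubling rule described in the comment above can be checked with a small standalone sketch. `demo_retry_delay` and `DEMO_RETRY_DELAY_MS` are hypothetical names; the 30000 ms base is an assumption taken from the 30-second example in the comment, not read from the Redis headers.

```c
#include <assert.h>

/* Assumed base delay: 30 seconds, as in the comment's example. */
#define DEMO_RETRY_DELAY_MS 30000LL

/* Return the delay before the next attempt. 'retry_num' is the number of
 * attempts already made; the delay doubles for every attempt after the
 * first one. */
long long demo_retry_delay(int retry_num) {
    long long delay = DEMO_RETRY_DELAY_MS;

    assert(retry_num >= 0);
    while (retry_num-- > 1) delay *= 2;
    return delay;
}
```

With these assumptions the first retry waits 30 seconds, the second 60 seconds, and the third 2 minutes, matching the sequence listed in the comment.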

/* Check for scripts that terminated, and remove them from the queue if the

* script terminated successfully. If instead the script was terminated by

* a signal, or returned exit code "1", it is scheduled to run again if

* the max number of retries has not been reached yet. */

void sentinelCollectTerminatedScripts(void) {

int statloc;

pid_t pid;

while ((pid = wait3(&statloc,WNOHANG,NULL)) > 0) {

int exitcode = WEXITSTATUS(statloc);

int bysignal = 0;

listNode *ln;

sentinelScriptJob *sj;

if (WIFSIGNALED(statloc)) bysignal = WTERMSIG(statloc);

sentinelEvent(LL_DEBUG,"-script-child",NULL,"%ld %d %d",

(long)pid, exitcode, bysignal);

ln = sentinelGetScriptListNodeByPid(pid);

if (ln == NULL) {

serverLog(LL_WARNING,"wait3() returned a pid (%ld) we can't find in our scripts execution queue!", (long)pid);

continue;

}

sj = ln->value;

/* If the script was terminated by a signal or returns an

* exit code of "1" (that means: please retry), we reschedule it

* if the max number of retries is not already reached. */

if ((bysignal || exitcode == 1) &&

sj->retry_num != SENTINEL_SCRIPT_MAX_RETRY)

{

sj->flags &= ~SENTINEL_SCRIPT_RUNNING;

sj->pid = 0;

sj->start_time = mstime() +

sentinelScriptRetryDelay(sj->retry_num);

} else {

/* Otherwise let's remove the script, but log the event if the

* execution did not terminate cleanly. */

if (bysignal || exitcode != 0) {

sentinelEvent(LL_WARNING,"-script-error",NULL,

"%s %d %d", sj->argv[0], bysignal, exitcode);

}

listDelNode(sentinel.scripts_queue,ln);

sentinelReleaseScriptJob(sj);

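}

/* The child has exited whether or not the job is going to be retried,

* so always decrement the counter of running scripts. Otherwise a retried

* job would be counted twice once it is started again. */

sentinel.running_scripts--;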

}

}

/* Kill scripts in timeout, they'll be collected by the

* sentinelCollectTerminatedScripts() function. */

void sentinelKillTimedoutScripts(void) {

listNode *ln;

listIter li;

mstime_t now = mstime();

listRewind(sentinel.scripts_queue,&li);

while ((ln = listNext(&li)) != NULL) {

sentinelScriptJob *sj = ln->value;

if (sj->flags & SENTINEL_SCRIPT_RUNNING &&

(now - sj->start_time) > SENTINEL_SCRIPT_MAX_RUNTIME)

{

sentinelEvent(LL_WARNING,"-script-timeout",NULL,"%s %ld",

sj->argv[0], (long)sj->pid);

kill(sj->pid,SIGKILL);

}

}

}

/* Implements SENTINEL PENDING-SCRIPTS command. */

void sentinelPendingScriptsCommand(client *c) {

listNode *ln;

listIter li;

addReplyMultiBulkLen(c,listLength(sentinel.scripts_queue));

listRewind(sentinel.scripts_queue,&li);

while ((ln = listNext(&li)) != NULL) {

sentinelScriptJob *sj = ln->value;

int j = 0;

addReplyMultiBulkLen(c,10);

addReplyBulkCString(c,"argv");

while (sj->argv[j]) j++;

addReplyMultiBulkLen(c,j);

j = 0;

while (sj->argv[j]) addReplyBulkCString(c,sj->argv[j++]);

addReplyBulkCString(c,"flags");

addReplyBulkCString(c,

(sj->flags & SENTINEL_SCRIPT_RUNNING) ? "running" : "scheduled");

addReplyBulkCString(c,"pid");

addReplyBulkLongLong(c,sj->pid);

if (sj->flags & SENTINEL_SCRIPT_RUNNING) {

addReplyBulkCString(c,"run-time");

addReplyBulkLongLong(c,mstime() - sj->start_time);

} else {

mstime_t delay = sj->start_time ? (sj->start_time-mstime()) : 0;

if (delay < 0) delay = 0;

addReplyBulkCString(c,"run-delay");

addReplyBulkLongLong(c,delay);

}

addReplyBulkCString(c,"retry-num");

addReplyBulkLongLong(c,sj->retry_num);

}

}

/* This function calls, if any, the client reconfiguration script with the

* following parameters:

*

* <master-name> <role> <state> <from-ip> <from-port> <to-ip> <to-port>

*

* It is called every time a failover is performed.

*

* <state> is currently always "failover".

* <role> is either "leader" or "observer".

*

* from/to fields are respectively master -> promoted slave addresses for

* "start" and "end". */

void sentinelCallClientReconfScript(sentinelRedisInstance *master, int role, char *state, sentinelAddr *from, sentinelAddr *to) {

char fromport[32], toport[32];

if (master->client_reconfig_script == NULL)

{

return;

}

ll2string(fromport,sizeof(fromport),from->port);

ll2string(toport,sizeof(toport),to->port);

sentinelScheduleScriptExecution(master->client_reconfig_script, master->name, (role == SENTINEL_LEADER) ? "leader" : "observer", state, from->ip, fromport, to->ip, toport, NULL);

}

/* =============================== instanceLink ============================= */

/* Create a not yet connected link object. */

instanceLink *createInstanceLink(void) {

instanceLink *link = zmalloc(sizeof(*link));

link->refcount = 1;

link->disconnected = 1;

link->pending_commands = 0;

link->cc = NULL;

link->pc = NULL;

link->cc_conn_time = 0;

link->pc_conn_time = 0;

link->last_reconn_time = 0;

link->pc_last_activity = 0;

/* We set the act_ping_time to "now" even if we actually don't have yet

* a connection with the node, nor we sent a ping.

* This is useful to detect a timeout in case we'll not be able to connect

* with the node at all. */

link->act_ping_time = mstime();

link->last_ping_time = 0;

link->last_avail_time = mstime();

link->last_pong_time = mstime();

return link;

}

/* Disconnect an hiredis connection in the context of an instance link. */

void instanceLinkCloseConnection(instanceLink *link, redisAsyncContext *c) {

if (c == NULL) return;

if (link->cc == c) {

link->cc = NULL;

link->pending_commands = 0;

}

if (link->pc == c) link->pc = NULL;

c->data = NULL;

link->disconnected = 1;

redisAsyncFree(c);

}

/* Decrement the refcount of a link object, if it drops to zero, actually

* free it and return NULL. Otherwise don't do anything and return the pointer

* to the object.

*

* If we are not going to free the link and ri is not NULL, we rebind all the

* pending requests in link->cc (hiredis connection for commands) to a

* callback that will just ignore them. This is useful to avoid processing

* replies for an instance that no longer exists. */

instanceLink *releaseInstanceLink(instanceLink *link, sentinelRedisInstance *ri)

{

serverAssert(link->refcount > 0);

link->refcount--;

if (link->refcount != 0) {

if (ri && ri->link->cc) {

/* This instance may have pending callbacks in the hiredis async

* context, having as 'privdata' the instance that we are going to

* free. Let's rewrite the callback list, directly exploiting

* hiredis internal data structures, in order to bind them with

* a callback that will ignore the reply at all. */

redisCallback *cb;

redisCallbackList *callbacks = &link->cc->replies;

cb = callbacks->head;

while(cb) {

if (cb->privdata == ri) {

cb->fn = sentinelDiscardReplyCallback;

cb->privdata = NULL; /* Not strictly needed. */

}

cb = cb->next;

}

}

return link; /* Other active users. */

}

instanceLinkCloseConnection(link,link->cc);

instanceLinkCloseConnection(link,link->pc);

zfree(link);

return NULL;

}
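The reference counting that lets several sentinelRedisInstance objects share one instanceLink can be reduced to a few lines. This is a minimal sketch under simplifying assumptions: `demo_link` and its three helpers are hypothetical, the hiredis contexts are left out, and plain malloc/free replace zmalloc/zfree.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-in for instanceLink. */
typedef struct demo_link {
    int refcount;      /* Number of instances using this link. */
    int disconnected;  /* Non-zero until a connection is established. */
} demo_link;

/* Create a link owned by exactly one user, not yet connected. */
demo_link *demo_link_create(void) {
    demo_link *link = malloc(sizeof(*link));
    if (link == NULL) return NULL;
    link->refcount = 1;
    link->disconnected = 1;
    return link;
}

/* Register one more user of an existing link. */
demo_link *demo_link_share(demo_link *link) {
    link->refcount++;
    return link;
}

/* Drop one user. Returns the link if it is still in use, or NULL once
 * the last user is gone and the memory has been freed. */
demo_link *demo_link_release(demo_link *link) {
    assert(link->refcount > 0);
    if (--link->refcount != 0) return link; /* Other active users. */
    free(link);
    return NULL;
}
```

The return convention follows releaseInstanceLink(): a non-NULL result tells the caller that the link is still alive and owned by someone else.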

/* This function will attempt to share the instance link we already have

* for the same Sentinel in the context of a different master, with the

* instance we are passing as argument.

*

* This way multiple Sentinel objects that refer all to the same physical

* Sentinel instance but in the context of different masters will use

* a single connection, will send a single PING per second for failure

* detection and so forth.

*

* Return C_OK if a matching Sentinel was found in the context of a

* different master and sharing was performed. Otherwise C_ERR

* is returned. */

int sentinelTryConnectionSharing(sentinelRedisInstance *ri) {

serverAssert(ri->flags & SRI_SENTINEL);

dictIterator *di;

dictEntry *de;

if (ri->runid == NULL) return C_ERR; /* No way to identify it. */

if (ri->link->refcount > 1) return C_ERR; /* Already shared. */

di = dictGetIterator(sentinel.masters);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *master = dictGetVal(de), *match;

/* We want to share with the same physical Sentinel referenced

* in other masters, so skip our master. */

if (master == ri->master) continue;

match = getSentinelRedisInstanceByAddrAndRunID(master->sentinels, NULL,0,ri->runid);

if (match == NULL) continue; /* No match. */

if (match == ri) continue; /* Should never happen but... safer. */

/* We identified a matching Sentinel, great! Let's free our link

* and use the one of the matching Sentinel. */

releaseInstanceLink(ri->link,NULL);

ri->link = match->link;

match->link->refcount++;

dictReleaseIterator(di); /* Release the iterator before the early return. */

return C_OK;

}

dictReleaseIterator(di);

return C_ERR;

}

/* When we detect a Sentinel to switch address (reporting a different IP/port

* pair in Hello messages), let's update all the matching Sentinels in the

* context of other masters as well and disconnect the links, so that everybody

* will be updated.

*

* Return the number of updated Sentinel addresses. */

int sentinelUpdateSentinelAddressInAllMasters(sentinelRedisInstance *ri) {

serverAssert(ri->flags & SRI_SENTINEL);

dictIterator *di;

dictEntry *de;

int reconfigured = 0;

di = dictGetIterator(sentinel.masters);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *master = dictGetVal(de), *match;

match = getSentinelRedisInstanceByAddrAndRunID(master->sentinels,

NULL,0,ri->runid);

/* If there is no match, this master does not know about this

* Sentinel, try with the next one. */

if (match == NULL) continue;

/* Disconnect the old links if connected. */

if (match->link->cc != NULL)

instanceLinkCloseConnection(match->link,match->link->cc);

if (match->link->pc != NULL)

instanceLinkCloseConnection(match->link,match->link->pc);

if (match == ri) continue; /* Address already updated for it. */

/* Update the address of the matching Sentinel by copying the address

* of the Sentinel object that received the address update. */

releaseSentinelAddr(match->addr);

match->addr = dupSentinelAddr(ri->addr);

reconfigured++;

}

dictReleaseIterator(di);

if (reconfigured)

sentinelEvent(LL_NOTICE,"+sentinel-address-update", ri,

"%@ %d additional matching instances", reconfigured);

return reconfigured;

}

/* This function is called when an hiredis connection reported an error.

* We set it to NULL and mark the link as disconnected so that it will be

* reconnected again.

*

* Note: we don't free the hiredis context as hiredis will do it for us

* for async connections. */

void instanceLinkConnectionError(const redisAsyncContext *c) {

instanceLink *link = c->data;

int pubsub;

if (!link) return;

pubsub = (link->pc == c);

if (pubsub)

link->pc = NULL;

else

link->cc = NULL;

link->disconnected = 1;

}

/* Hiredis connection established / disconnected callbacks. We need them

* just to cleanup our link state. */

void sentinelLinkEstablishedCallback(const redisAsyncContext *c, int status) {

if (status != C_OK) instanceLinkConnectionError(c);

}

void sentinelDisconnectCallback(const redisAsyncContext *c, int status) {

UNUSED(status);

instanceLinkConnectionError(c);

}

/* ========================== sentinelRedisInstance ========================= */

/* Create a redis instance, the following fields must be populated by the

* caller if needed:

* runid: set to NULL but will be populated once INFO output is received.

* info_refresh: is set to 0 to mean that we never received INFO so far.

*

* If SRI_MASTER is set into initial flags the instance is added to

* sentinel.masters table.

*

* if SRI_SLAVE or SRI_SENTINEL is set then 'master' must be not NULL and the

* instance is added into master->slaves or master->sentinels table.

*

* If the instance is a slave or sentinel, the name parameter is ignored and

* is created automatically as hostname:port.

*

* The function fails if hostname can't be resolved or port is out of range.

* When this happens NULL is returned and errno is set accordingly to the

* createSentinelAddr() function.

*

* The function may also fail and return NULL with errno set to EBUSY if

* a master with the same name, a slave with the same address, or a sentinel

* with the same ID already exists. */

sentinelRedisInstance *createSentinelRedisInstance(char *name, int flags, char *hostname, int port, int quorum, sentinelRedisInstance *master) {

sentinelRedisInstance *ri;

sentinelAddr *addr;

dict *table = NULL;

char slavename[NET_PEER_ID_LEN], *sdsname;

serverAssert(flags & (SRI_MASTER|SRI_SLAVE|SRI_SENTINEL));

serverAssert((flags & SRI_MASTER) || master != NULL);

/* Check address validity. */

addr = createSentinelAddr(hostname,port);

if (addr == NULL)

{

return NULL;

}

/* For slaves use ip:port as name. */

if (flags & SRI_SLAVE) {

anetFormatAddr(slavename, sizeof(slavename), hostname, port);

name = slavename;

}

/* Make sure the entry is not duplicated. This may happen when the same

* name for a master is used multiple times inside the configuration or

* if we try to add multiple times a slave or sentinel with same ip/port

* to a master. */

if (flags & SRI_MASTER)

{

table = sentinel.masters; /* Table of configured masters. Open question: how is a crashed master handled here? */

}

else if (flags & SRI_SLAVE)

{

table = master->slaves;

}

else if (flags & SRI_SENTINEL)

{

table = master->sentinels;

}

sdsname = sdsnew(name);

if (dictFind(table,sdsname)) {

releaseSentinelAddr(addr);

sdsfree(sdsname);

errno = EBUSY;

return NULL;

}

/* Create the instance object. */

ri = zmalloc(sizeof(*ri));

/* Note that all the instances are started in the disconnected state,

* the event loop will take care of connecting them. */

ri->flags = flags;

ri->name = sdsname;

ri->runid = NULL;

ri->config_epoch = 0;

ri->addr = addr;

ri->link = createInstanceLink();

ri->last_pub_time = mstime();

ri->last_hello_time = mstime();

ri->last_master_down_reply_time = mstime();

ri->s_down_since_time = 0;

ri->o_down_since_time = 0;

ri->down_after_period = master ? master->down_after_period :

SENTINEL_DEFAULT_DOWN_AFTER;

ri->master_link_down_time = 0;

ri->auth_pass = NULL;

ri->slave_priority = SENTINEL_DEFAULT_SLAVE_PRIORITY;

ri->slave_reconf_sent_time = 0;

ri->slave_master_host = NULL;

ri->slave_master_port = 0;

ri->slave_master_link_status = SENTINEL_MASTER_LINK_STATUS_DOWN;

ri->slave_repl_offset = 0;

ri->sentinels = dictCreate(&instancesDictType,NULL);

ri->quorum = quorum;

ri->parallel_syncs = SENTINEL_DEFAULT_PARALLEL_SYNCS;

ri->master = master;

ri->slaves = dictCreate(&instancesDictType,NULL);

ri->info_refresh = 0;

ri->renamed_commands = dictCreate(&renamedCommandsDictType,NULL);

/* Failover state. */

ri->leader = NULL;

ri->leader_epoch = 0;

ri->failover_epoch = 0;

ri->failover_state = SENTINEL_FAILOVER_STATE_NONE;

ri->failover_state_change_time = 0;

ri->failover_start_time = 0;

ri->failover_timeout = SENTINEL_DEFAULT_FAILOVER_TIMEOUT;

ri->failover_delay_logged = 0;

ri->promoted_slave = NULL;

ri->notification_script = NULL;

ri->client_reconfig_script = NULL;

ri->info = NULL;

/* Role */

ri->role_reported = ri->flags & (SRI_MASTER|SRI_SLAVE);

ri->role_reported_time = mstime();

ri->slave_conf_change_time = mstime();

/* Add into the right table. */

dictAdd(table, ri->name, ri);

return ri;

}

/* Release this instance and all its slaves, sentinels, hiredis connections.

* This function does not take care of unlinking the instance from the main

* masters table (if it is a master) or from its master sentinels/slaves table

* if it is a slave or sentinel. */

void releaseSentinelRedisInstance(sentinelRedisInstance *ri) {

/* Release all its slaves or sentinels if any. */

dictRelease(ri->sentinels);

dictRelease(ri->slaves);

/* Disconnect the instance. */

releaseInstanceLink(ri->link,ri);

/* Free other resources. */

sdsfree(ri->name);

sdsfree(ri->runid);

sdsfree(ri->notification_script);

sdsfree(ri->client_reconfig_script);

sdsfree(ri->slave_master_host);

sdsfree(ri->leader);

sdsfree(ri->auth_pass);

sdsfree(ri->info);

releaseSentinelAddr(ri->addr);

dictRelease(ri->renamed_commands);

/* Clear state into the master if needed. */

if ((ri->flags & SRI_SLAVE) && (ri->flags & SRI_PROMOTED) && ri->master)

ri->master->promoted_slave = NULL;

zfree(ri);

}

/* Lookup a slave in a master Redis instance, by ip and port. */

sentinelRedisInstance *sentinelRedisInstanceLookupSlave(

sentinelRedisInstance *ri, char *ip, int port)

{

sds key;

sentinelRedisInstance *slave;

char buf[NET_PEER_ID_LEN];

serverAssert(ri->flags & SRI_MASTER);

anetFormatAddr(buf,sizeof(buf),ip,port);

key = sdsnew(buf);

slave = dictFetchValue(ri->slaves,key);

sdsfree(key);

return slave;

}

/* Return the name of the type of the instance as a string. */

const char *sentinelRedisInstanceTypeStr(sentinelRedisInstance *ri) {

if (ri->flags & SRI_MASTER) return "master";

else if (ri->flags & SRI_SLAVE) return "slave";

else if (ri->flags & SRI_SENTINEL) return "sentinel";

else return "unknown";

}

/* This function removes the Sentinel with the specified ID from the

* specified master.

*

* If "runid" is NULL the function returns ASAP.

*

* This function is useful because on Sentinels address switch, we want to

* remove our old entry and add a new one for the same ID but with the new

* address.

*

* The function returns 1 if the matching Sentinel was removed, otherwise

* 0 if there was no Sentinel with this ID. */

int removeMatchingSentinelFromMaster(sentinelRedisInstance *master, char *runid) {

dictIterator *di;

dictEntry *de;

int removed = 0;

if (runid == NULL) return 0;

di = dictGetSafeIterator(master->sentinels);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (ri->runid && strcmp(ri->runid,runid) == 0) {

dictDelete(master->sentinels,ri->name);

removed++;

}

}

dictReleaseIterator(di);

return removed;

}

/* Search an instance with the same runid, ip and port into a dictionary

* of instances. Return NULL if not found, otherwise return the instance

* pointer.

*

* runid or ip can be NULL. In such a case the search is performed only

* by the non-NULL field. */

sentinelRedisInstance *getSentinelRedisInstanceByAddrAndRunID(dict *instances, char *ip, int port, char *runid) {

dictIterator *di;

dictEntry *de;

sentinelRedisInstance *instance = NULL;

serverAssert(ip || runid); /* User must pass at least one search param. */

di = dictGetIterator(instances);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (runid && !ri->runid) continue;

if ((runid == NULL || strcmp(ri->runid, runid) == 0) &&

(ip == NULL || (strcmp(ri->addr->ip, ip) == 0 &&

ri->addr->port == port)))

{

instance = ri;

break;

}

}

dictReleaseIterator(di);

return instance;

}
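The matching rule used by getSentinelRedisInstanceByAddrAndRunID() treats a NULL runid or a NULL ip as "do not filter on this field". The sketch below reproduces only that predicate over a plain array instead of a dict; `demo_entry` and `demo_find` are hypothetical names used for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-in for an instance in the dictionary. */
typedef struct demo_entry {
    const char *runid; /* May be NULL if not yet learned via INFO. */
    const char *ip;
    int port;
} demo_entry;

/* Return the first entry matching every non-NULL search field, or NULL.
 * At least one of 'ip' and 'runid' must be given. */
const demo_entry *demo_find(const demo_entry *entries, size_t count,
                            const char *ip, int port, const char *runid) {
    assert(ip || runid);
    for (size_t i = 0; i < count; i++) {
        const demo_entry *e = &entries[i];

        /* An entry without a runid can never match a runid search. */
        if (runid && !e->runid) continue;
        if ((runid == NULL || strcmp(e->runid, runid) == 0) &&
            (ip == NULL || (strcmp(e->ip, ip) == 0 && e->port == port)))
        {
            return e;
        }
    }
    return NULL;
}
```

Passing `NULL, 0, runid` searches by run ID alone, which is how the connection-sharing and address-update functions above call the real lookup.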

/* Master lookup by name */

sentinelRedisInstance *sentinelGetMasterByName(char *name) {

sentinelRedisInstance *ri;

sds sdsname = sdsnew(name);

ri = dictFetchValue(sentinel.masters,sdsname);

sdsfree(sdsname);

return ri;

}

/* Add the specified flags to all the instances in the specified dictionary. */

void sentinelAddFlagsToDictOfRedisInstances(dict *instances, int flags) {

dictIterator *di;

dictEntry *de;

di = dictGetIterator(instances);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

ri->flags |= flags;

}

dictReleaseIterator(di);

}

/* Remove the specified flags from all the instances in the specified

* dictionary. */

void sentinelDelFlagsToDictOfRedisInstances(dict *instances, int flags) {

dictIterator *di;

dictEntry *de;

di = dictGetIterator(instances);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

ri->flags &= ~flags;

}

dictReleaseIterator(di);

}

/* Reset the state of a monitored master:

* 1) Remove all slaves.

* 2) Remove all sentinels.

* 3) Remove most of the flags resulting from runtime operations.

* 4) Reset timers to their default value. For example after a reset it will be

* possible to failover again the same master ASAP, without waiting the

* failover timeout delay.

* 5) In the process of doing this undo the failover if in progress.

* 6) Disconnect the connections with the master (will reconnect automatically).

*/

#define SENTINEL_RESET_NO_SENTINELS (1<<0)

void sentinelResetMaster(sentinelRedisInstance *ri, int flags) {

serverAssert(ri->flags & SRI_MASTER);

dictRelease(ri->slaves);

ri->slaves = dictCreate(&instancesDictType,NULL);

if (!(flags & SENTINEL_RESET_NO_SENTINELS)) {

dictRelease(ri->sentinels);

ri->sentinels = dictCreate(&instancesDictType,NULL);

}

instanceLinkCloseConnection(ri->link,ri->link->cc);

instanceLinkCloseConnection(ri->link,ri->link->pc);

ri->flags &= SRI_MASTER;

if (ri->leader) {

sdsfree(ri->leader);

ri->leader = NULL;

}

ri->failover_state = SENTINEL_FAILOVER_STATE_NONE;

ri->failover_state_change_time = 0;

ri->failover_start_time = 0; /* We can failover again ASAP. */

ri->promoted_slave = NULL;

sdsfree(ri->runid);

sdsfree(ri->slave_master_host);

ri->runid = NULL;

ri->slave_master_host = NULL;

ri->link->act_ping_time = mstime();

ri->link->last_ping_time = 0;

ri->link->last_avail_time = mstime();

ri->link->last_pong_time = mstime();

ri->role_reported_time = mstime();

ri->role_reported = SRI_MASTER;

if (flags & SENTINEL_GENERATE_EVENT)

sentinelEvent(LL_WARNING,"+reset-master",ri,"%@");

}

/* Call sentinelResetMaster() on every master with a name matching the specified

* pattern. */

int sentinelResetMastersByPattern(char *pattern, int flags) {

dictIterator *di;

dictEntry *de;

int reset = 0;

di = dictGetIterator(sentinel.masters);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (ri->name) {

if (stringmatch(pattern,ri->name,0)) {

sentinelResetMaster(ri,flags);

reset++;

}

}

}

dictReleaseIterator(di);

return reset;

}
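The loop above resets every master whose name matches a glob-style pattern and returns how many were reset. Here is a rough sketch of that counting logic. It uses POSIX `fnmatch()` as a stand-in for Redis's own `stringmatch()`, so the matching rules may differ in corner cases. `demo_count_matches` and the name array are hypothetical.

```c
#include <assert.h>
#include <fnmatch.h>
#include <stddef.h>

/* Count the names that match 'pattern', skipping NULL names the same way
 * the loop above skips instances without a name.
 * Note: fnmatch() returns 0 on a match, while stringmatch() returns non-zero. */
static int demo_count_matches(const char *pattern, const char **names, size_t n) {
    int matched = 0;
    assert(pattern != NULL);
    for (size_t i = 0; i < n; i++) {
        if (names[i] == NULL) continue;
        if (fnmatch(pattern, names[i], 0) == 0) matched++;
    }
    return matched;
}
```

With the names `mymaster`, `cache-master` and `mymaster2`, the pattern `my*` would count two entries, and `*` would count all three.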

/* Reset the specified master with sentinelResetMaster(), and also change

* the ip:port address, but keep the name of the instance unmodified.

*

* This is used to handle the +switch-master event.

*

* The function returns C_ERR if the address can't be resolved for some

* reason. Otherwise C_OK is returned. */

int sentinelResetMasterAndChangeAddress(sentinelRedisInstance *master, char *ip, int port) {

sentinelAddr *oldaddr, *newaddr;

sentinelAddr **slaves = NULL;

int numslaves = 0, j;

dictIterator *di;

dictEntry *de;

newaddr = createSentinelAddr(ip,port);

if (newaddr == NULL)

{

return C_ERR;

}

/* Make a list of slaves to add back after the reset.

* Don't include the one having the address we are switching to. */

di = dictGetIterator(master->slaves);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *slave = dictGetVal(de);

if (sentinelAddrIsEqual(slave->addr,newaddr))

{

continue;

}

slaves = zrealloc(slaves,sizeof(sentinelAddr*)*(numslaves+1));

slaves[numslaves++] = createSentinelAddr(slave->addr->ip, slave->addr->port);

}

dictReleaseIterator(di);

/* If we are switching to a different address, include the old address

* as a slave as well, so that we'll be able to sense / reconfigure

* the old master. */

if (!sentinelAddrIsEqual(newaddr,master->addr)) {

slaves = zrealloc(slaves,sizeof(sentinelAddr*)*(numslaves+1));

slaves[numslaves++] = createSentinelAddr(master->addr->ip,

master->addr->port);

}

/* Reset and switch address. */

sentinelResetMaster(master,SENTINEL_RESET_NO_SENTINELS);

oldaddr = master->addr;

master->addr = newaddr;

master->o_down_since_time = 0;

master->s_down_since_time = 0;

/* Add slaves back. */

for (j = 0; j < numslaves; j++) {

sentinelRedisInstance *slave;

slave = createSentinelRedisInstance(NULL,SRI_SLAVE,slaves[j]->ip, slaves[j]->port, master->quorum, master);

releaseSentinelAddr(slaves[j]);

if (slave)

{

sentinelEvent(LL_NOTICE,"+slave",slave,"%@");

}

}

zfree(slaves);

/* Release the old address at the end so we are safe even if the function

* gets the master->addr->ip and master->addr->port as arguments. */

releaseSentinelAddr(oldaddr);

sentinelFlushConfig();

return C_OK;

}
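The key point of the function above is ordering: the slave addresses are copied out before the reset destroys the slaves dict, the slave being promoted is skipped, and the old master is appended so that it can be monitored as a slave afterwards. Below is a simplified sketch of just that selection step. `demo_addr` and `demo_collect_replicas` are hypothetical names, and the sketch allocates the worst-case size once instead of growing the array with `zrealloc()` as the original does.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical, simplified address type (the original uses sentinelAddr). */
typedef struct {
    char ip[46];
    int port;
} demo_addr;

static int demo_addr_equal(const demo_addr *a, const demo_addr *b) {
    return a->port == b->port && strcmp(a->ip, b->ip) == 0;
}

/* Build the list of addresses to re-add as slaves after switching to
 * 'newaddr'. Returns a malloc'ed array (the caller frees it) and stores
 * its length in *out_n. Returns NULL if the allocation fails. */
static demo_addr *demo_collect_replicas(const demo_addr *replicas, size_t n,
                                        const demo_addr *old_master,
                                        const demo_addr *newaddr,
                                        size_t *out_n) {
    size_t count = 0;
    demo_addr *out;

    assert(out_n != NULL);
    /* Worst case: every replica plus the old master. */
    out = malloc(sizeof(*out) * (n + 1));
    if (out == NULL) {
        *out_n = 0;
        return NULL;
    }
    for (size_t i = 0; i < n; i++) {
        /* Skip the replica we are switching to: it becomes the master. */
        if (demo_addr_equal(&replicas[i], newaddr)) continue;
        out[count++] = replicas[i];
    }
    /* If the address really changes, the old master is re-added as a slave. */
    if (!demo_addr_equal(old_master, newaddr)) out[count++] = *old_master;
    *out_n = count;
    return out;
}
```

For a master at 10.0.0.1 with slaves 10.0.0.2 and 10.0.0.3, switching to 10.0.0.2 would produce the list 10.0.0.3, 10.0.0.1.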

/* Return non-zero if there was no SDOWN or ODOWN error associated to this

* instance in the latest 'ms' milliseconds. */

int sentinelRedisInstanceNoDownFor(sentinelRedisInstance *ri, mstime_t ms) {

mstime_t most_recent;

most_recent = ri->s_down_since_time;

if (ri->o_down_since_time > most_recent)

most_recent = ri->o_down_since_time;

return most_recent == 0 || (mstime() - most_recent) > ms;

}
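The check above takes the later of the two "down since" timestamps and asks whether it is either unset (0) or older than `ms` milliseconds. Here is a small sketch of the same comparison with the current time passed in explicitly, so the behaviour can be checked without a clock. `demo_mstime_t` and `demo_no_down_for` are hypothetical names; the explicit `now` parameter is an assumption added for the example, since the original calls `mstime()`.

```c
#include <assert.h>
#include <stdint.h>

typedef int64_t demo_mstime_t; /* hypothetical stand-in for mstime_t */

/* Return non-zero if neither SDOWN nor ODOWN was recorded in the
 * last 'ms' milliseconds before 'now'. A timestamp of 0 means "never". */
static int demo_no_down_for(demo_mstime_t s_down_since,
                            demo_mstime_t o_down_since,
                            demo_mstime_t now,
                            demo_mstime_t ms) {
    demo_mstime_t most_recent;

    assert(ms >= 0);
    most_recent = s_down_since;
    if (o_down_since > most_recent) most_recent = o_down_since;
    return most_recent == 0 || (now - most_recent) > ms;
}
```

Note that the comparison is strict: a down event exactly `ms` milliseconds ago still counts as "recent".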

/* Return the current master address, that is, its address or the address

* of the promoted slave if already operational. */

sentinelAddr *sentinelGetCurrentMasterAddress(sentinelRedisInstance *master) {

/* If we are failing over the master, and the state is already

* SENTINEL_FAILOVER_STATE_RECONF_SLAVES or greater, it means that we

* already have the new configuration epoch in the master, and the

* slave acknowledged the configuration switch. Advertise the new

* address. */

if ((master->flags & SRI_FAILOVER_IN_PROGRESS) &&

master->promoted_slave &&

master->failover_state >= SENTINEL_FAILOVER_STATE_RECONF_SLAVES)

{

return master->promoted_slave->addr;

} else {

return master->addr;

}

}

/* This function sets the down_after_period field value in 'master' to all

* the slaves and sentinel instances connected to this master. */

void sentinelPropagateDownAfterPeriod(sentinelRedisInstance *master) {

dictIterator *di;

dictEntry *de;

int j;

dict *d[] = {master->slaves, master->sentinels, NULL};

for (j = 0; d[j]; j++) {

di = dictGetIterator(d[j]);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

ri->down_after_period = master->down_after_period;

}

dictReleaseIterator(di);

}

}

char *sentinelGetInstanceTypeString(sentinelRedisInstance *ri) {

if (ri->flags & SRI_MASTER) return "master";

else if (ri->flags & SRI_SLAVE) return "slave";

else if (ri->flags & SRI_SENTINEL) return "sentinel";

else return "unknown";

}

/* This function is used in order to send commands to Redis instances: the

* commands we send from Sentinel may be renamed, a common case is a master

* with CONFIG and SLAVEOF commands renamed for security concerns. In that

* case we check the ri->renamed_command table (or if the instance is a slave,

* we check the one of the master), and map the command that we should send

* to the set of renamed commands. However, if the command was not renamed,

* we just return "command" itself. */

char *sentinelInstanceMapCommand(sentinelRedisInstance *ri, char *command) {

sds sc = sdsnew(command);

if (ri->master) ri = ri->master;

char *retval = dictFetchValue(ri->renamed_commands, sc);

sdsfree(sc);

return retval ? retval : command;

}
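The mapping above is a lookup with a fallback: if the command has an entry in the renamed-commands table, the new name is returned, otherwise the original name is returned unchanged. Here is a minimal sketch of that idea using a plain array instead of a Redis dict. `demo_rename` and `demo_map_command` are hypothetical names, and the exact-case `strcmp()` comparison is an assumption of the sketch, not a statement about how the real table compares keys.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical table entry: original command name -> renamed command. */
typedef struct {
    const char *original;
    const char *renamed;
} demo_rename;

/* Return the renamed command if 'command' is in the table,
 * otherwise return 'command' itself. */
static const char *demo_map_command(const demo_rename *table, size_t n,
                                    const char *command) {
    assert(command != NULL);
    for (size_t i = 0; i < n; i++) {
        if (strcmp(table[i].original, command) == 0) return table[i].renamed;
    }
    return command;
}
```

With a table containing only `CONFIG` mapped to `GUESSME-CONFIG`, a lookup of `CONFIG` returns the renamed command while `PING` comes back unchanged.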

/* ============================ Config handling ============================= */

char *sentinelHandleConfiguration(char **argv, int argc) {

sentinelRedisInstance *ri;

if (!strcasecmp(argv[0],"monitor") && argc == 5) {

/* monitor <name> <host> <port> <quorum> */

int quorum = atoi(argv[4]);

if (quorum <= 0) return "Quorum must be 1 or greater.";

// Create the master instance and store it.

if (createSentinelRedisInstance(argv[1],SRI_MASTER,argv[2], atoi(argv[3]),quorum,NULL) == NULL)

{

switch(errno) {

case EBUSY: return "Duplicated master name.";

case ENOENT: return "Can't resolve master instance hostname.";

case EINVAL: return "Invalid port number";

}

}

} else if (!strcasecmp(argv[0],"down-after-milliseconds") && argc == 3) {

/* down-after-milliseconds <name> <milliseconds> */

ri = sentinelGetMasterByName(argv[1]);

if (!ri) return "No such master with specified name.";

ri->down_after_period = atoi(argv[2]);

if (ri->down_after_period <= 0)

return "negative or zero time parameter.";

sentinelPropagateDownAfterPeriod(ri);

} else if (!strcasecmp(argv[0],"failover-timeout") && argc == 3) {

/* failover-timeout <name> <milliseconds> */